Deep Learning

Overview & Service Offerings

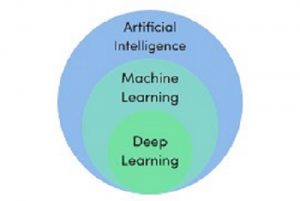

Deep Learning’s Place in the AI Ecosystem

Deep Learning is a subset of Machine Learning, which is itself a subset of Artificial Intelligence (AI).

Summary of Deep Learning Characteristics

Deep Learning algorithms use neural networks to solve problems.

Deep Learning vs Machine Learning

Deep Learning embeds feature extraction in the hidden layers of the neural network, whereas in classical Machine Learning feature extraction is performed as a separate step before the model is trained. This is one of several differences between the two.

The development of the backpropagation algorithm allows neural networks to calculate how each weight contributes to the output error and to adjust the weights accordingly. In doing so, the networks discover their own internal representations of datasets, which is what allows neural nets to solve problems.

Of course, backpropagation in neural networks is always preceded by a ‘feed-forward’ pass, by definition. One may also assume that many neural networks utilize a purely ‘feed-forward’ architecture. Notable exceptions include ‘Restricted Boltzmann Machines’ and ‘Recurrent Neural Networks’.
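The feed-forward pass followed by backpropagation can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions, not a production implementation: the two-layer shape, the tanh hidden activation, the squared-error loss, and the names `forward` and `loss_and_grads` are all hypothetical choices made for the example.

```python
import numpy as np

def forward(x, W1, W2):
    """Feed-forward pass: input -> tanh hidden layer -> linear output."""
    h = np.tanh(W1 @ x)
    y = W2 @ h
    return h, y

def loss_and_grads(x, target, W1, W2):
    """Run the forward pass, then backpropagate a squared-error loss."""
    h, y = forward(x, W1, W2)            # the forward pass always comes first
    err = y - target
    loss = 0.5 * float(err @ err)
    dW2 = np.outer(err, h)               # gradient w.r.t. output weights
    dh = W2.T @ err                      # error propagated back to the hidden layer
    dW1 = np.outer(dh * (1.0 - h ** 2), x)  # tanh'(a) = 1 - tanh(a)^2
    return loss, dW1, dW2

# Illustrative sizes only: 3 inputs, 4 hidden units, 2 outputs.
rng = np.random.default_rng(0)
x = rng.normal(size=3)
target = rng.normal(size=2)
W1 = rng.normal(size=(4, 3))
W2 = rng.normal(size=(2, 4))
loss, dW1, dW2 = loss_and_grads(x, target, W1, W2)
```

A training step would then subtract a small multiple of `dW1` and `dW2` from the weights and repeat.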

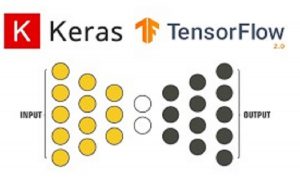

Preferred Solutions Framework

Creating the neural networks at the foundation of Deep Learning requires the manipulation of vectors and, more generally, tensors. TensorFlow supports graph-based execution of tensor operations. Although numerous solutions exist for building Deep Learning neural networks, our preferred solution is TensorFlow together with Python and NumPy. Our team also utilizes Pandas DataFrames to process tabular data.

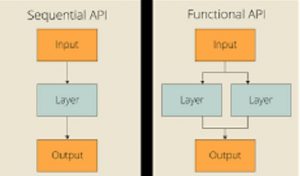

Our team prefers to utilize an API which sits on top of TensorFlow, called Keras. Keras serves as an abstraction over the underlying TensorFlow models and methods. Keras provides two model types to support TensorFlow:

- Sequential Model

- Functional API

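The Sequential Model covers simple linear stacks of layers, while the Functional API allows the construction of solutions for more complex problems, such as models with multiple inputs or outputs.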
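As a rough sketch of the two Keras model types, the example below builds one small model of each kind. The layer sizes, input shapes, and input names (`numeric`, `aux`) are hypothetical values picked for illustration, and the code assumes TensorFlow is installed.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Sequential Model: a plain stack of layers, one input and one output.
sequential_model = keras.Sequential([
    keras.Input(shape=(8,)),
    layers.Dense(16, activation="relu"),
    layers.Dense(1, activation="sigmoid"),
])

# Functional API: layers are wired together explicitly, which permits
# non-linear topologies such as the two-input model below.
numeric_in = keras.Input(shape=(8,), name="numeric")
aux_in = keras.Input(shape=(4,), name="aux")
hidden = layers.Dense(16, activation="relu")(numeric_in)
merged = layers.concatenate([hidden, aux_in])
output = layers.Dense(1, activation="sigmoid")(merged)
functional_model = keras.Model(inputs=[numeric_in, aux_in], outputs=output)
```

The Sequential version is shorter, but it cannot express the second input branch; that is the kind of case where the Functional API becomes necessary.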

Measuring Success & Error for Deep Learning Models

Our team divides measures of success & error into three groups, according to the type of problem:

- binary classification

- multi-class classification

- regression
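One common error measure for each of the three groups can be sketched as follows. The pairing shown here (binary cross-entropy, categorical cross-entropy, and mean squared error) is our assumption about typical choices; other metrics such as accuracy or mean absolute error are equally valid, and the function names are illustrative.

```python
import numpy as np

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Binary classification: y_true in {0, 1}, p_pred = predicted P(class 1)."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))

def categorical_cross_entropy(y_onehot, p_pred, eps=1e-12):
    """Multi-class classification: one-hot labels, one probability row per sample."""
    y = np.asarray(y_onehot, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0)
    return float(-np.mean(np.sum(y * np.log(p), axis=1)))

def mean_squared_error(y_true, y_pred):
    """Regression: average squared difference between target and prediction."""
    y = np.asarray(y_true, dtype=float)
    y_hat = np.asarray(y_pred, dtype=float)
    return float(np.mean((y - y_hat) ** 2))
```

For instance, a binary classifier that outputs 0.5 for every sample scores a binary cross-entropy of ln 2, which is a useful baseline to beat.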

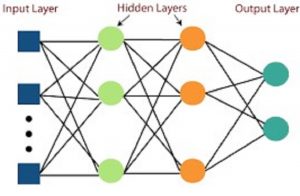

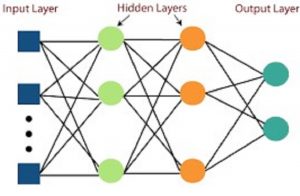

Multi-Layer Perceptron

A basic progression towards Deep Learning models starts with the concept of the ‘Multi-Layer Perceptron’ (MLP). A Multi-Layer Perceptron is a class of feedforward neural network.

MLPs consist of at least three layers of nodes:

- an input layer,

- a hidden layer, and

- an output layer.

Except for the input nodes, each node is a neuron that uses a nonlinear activation function. MLPs are trained with a supervised learning technique called backpropagation. An MLP can distinguish data that is not linearly separable. MLPs are considered to be the most basic of Deep Learning neural networks. Consequently, MLPs deliver limited functionality compared with more specialized Deep Learning solutions.
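XOR is the classic example of data that is not linearly separable. The sketch below shows a three-layer MLP computing XOR. Note the assumptions: the weights are hand-picked for illustration rather than learned by backpropagation, the hidden layer uses ReLU, and the output node is kept linear to keep the arithmetic easy to follow.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

# Hand-picked (not trained) weights: 2 inputs -> 2 hidden units -> 1 output.
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])
b1 = np.array([0.0, -1.0])
w2 = np.array([1.0, -2.0])

def mlp_xor(x):
    """Forward pass of the tiny MLP; returns 1.0 for XOR-true inputs, else 0.0."""
    h = relu(W1 @ np.asarray(x, dtype=float) + b1)  # nonlinear hidden layer
    return float(w2 @ h)

outputs = [mlp_xor(x) for x in ([0, 0], [0, 1], [1, 0], [1, 1])]
```

Without the nonlinear hidden layer, no choice of weights could separate these four points, which is why the hidden layer matters.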

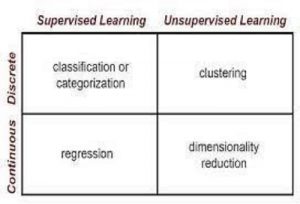

Deep Learning Categories

Deep Learning algorithms are split into two categories according to the type of supervision:

- Supervised

- Unsupervised

Another axis, for each, is:

- Continuous

- Discrete

Related learning paradigms, often discussed alongside Supervised Deep Learning, include:

- Semi-Supervised

- Reinforcement

Specific Unsupervised Deep Learning algorithms include:

- Autoencoders

- Deep Autoencoders

- Variational Autoencoders

- Restricted Boltzmann Machines

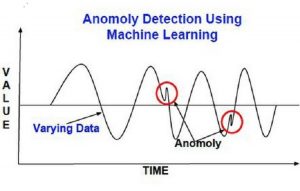

General classes of Unsupervised Deep Learning algorithms include, along with a number of others:

- Clustering

- Anomaly detection

Unsupervised Deep Learning could be characterized as ‘Passive’ learning from unlabeled datasets. Note also that Unsupervised Learning cannot learn anything beyond the structure present in the provided dataset. This contrasts with Supervised Learning, where the labels supply additional information that the model learns to predict for data outside of the provided dataset.

Of all of the Unsupervised Deep Learning algorithms/classes, the team at Synthys Medical has focused primarily on solutions based on one:

- Anomaly detection

Our experience strongly suggests that ‘anomaly detection’ routinely provides the highest payoff of the Unsupervised Learning solution options.
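One common way to frame anomaly detection is through reconstruction error: a model learns to compress and rebuild ‘normal’ data, and samples it rebuilds poorly are flagged. The sketch below is only an illustration of that idea. It swaps in a linear projection (PCA via SVD) as a stand-in for a trained autoencoder, and the synthetic data and the mean-plus-three-standard-deviations threshold are assumptions made for the example, not a description of our production approach.

```python
import numpy as np

def reconstruction_errors(X, n_components=1):
    """Project onto the top principal components and measure what is lost."""
    mean = X.mean(axis=0)
    Xc = X - mean
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V = Vt[:n_components].T                 # "encoder" and "decoder" share V
    X_hat = Xc @ V @ V.T + mean             # compress, then reconstruct
    return np.linalg.norm(X - X_hat, axis=1)

# Synthetic data: 100 normal points on the line y = 2x, plus one outlier.
x = np.linspace(-5.0, 5.0, 100)
normal_points = np.column_stack([x, 2.0 * x])
outlier = np.array([[0.0, 10.0]])
X = np.vstack([normal_points, outlier])

errors = reconstruction_errors(X)
threshold = errors.mean() + 3.0 * errors.std()   # assumed rule of thumb
flagged = np.where(errors > threshold)[0]        # indices of suspected anomalies
```

With a Deep Autoencoder, the projection step would be replaced by the trained encoder and decoder networks, while the thresholding logic stays the same.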

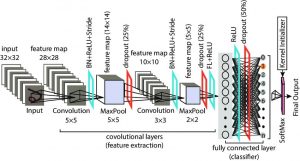

Convolutional Neural Network

One of the most powerful specialized Deep Learning solutions is the Convolutional Neural Network (CNN). CNNs are powerful because they have solved some of the most challenging problems in computer vision, and they regularly handle complex object recognition tasks.

One of the keys to the success of CNNs is their unique ability to encode spatial relationships.
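To make the idea of encoding spatial relationships concrete, the sketch below slides a small kernel over an image and responds only where neighboring pixels differ. It is a simplified illustration: the function name `conv2d_valid`, the toy image, and the edge-detecting kernel are our own choices, and, like most CNN layers, it computes a cross-correlation (the kernel is not flipped).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image ('valid' mode, stride 1, no padding)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Each output value depends only on a small local patch.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy image: dark left half, bright right half.
image = np.zeros((4, 6))
image[:, 3:] = 1.0

# Kernel that measures the difference between horizontal neighbors.
kernel = np.array([[-1.0, 1.0]])
feature_map = conv2d_valid(image, kernel)
```

The resulting feature map is zero everywhere except along the column where dark meets bright. In a CNN the kernel values are learned rather than hand-written.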

The ability of CNNs to encode highly correlated spatial information is not limited to vision applications. It also extends to audio and text. More specifically, possible applications include:

- audio processing and classification

- image denoising

- image super-resolution

- text summarization and other text-processing & classification tasks

- data encryption

As a technical aside, ‘convolution’ is a term commonly associated with signal processing. Many convolution applications in signal processing only became computationally practical after the development of the fast Fourier transform (FFT) algorithm.
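The link between convolution and the FFT is the convolution theorem: convolving two signals is equivalent to multiplying their Fourier transforms. A minimal NumPy sketch, with illustrative signal values and a helper name (`fft_convolve`) of our own choosing:

```python
import numpy as np

def fft_convolve(a, b):
    """Linear convolution of two 1-D signals computed through the FFT."""
    n = len(a) + len(b) - 1              # length of the full convolution
    spectrum = np.fft.rfft(a, n) * np.fft.rfft(b, n)
    return np.fft.irfft(spectrum, n)

signal = [1.0, 2.0, 3.0]
filt = [0.0, 1.0, 0.5]

direct = np.convolve(signal, filt)       # direct sliding-sum computation
via_fft = fft_convolve(signal, filt)     # same result through the FFT
```

For long signals the FFT route needs on the order of n log n operations instead of n squared, which is where the practical benefit comes from.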

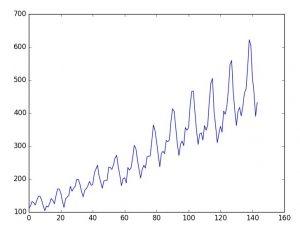

Recurrent Neural Networks & Time Series Forecasting

Recurrent Neural Networks (RNNs) are a very ‘odd duck’. RNNs possess layers with internal memory, which learn to remember, or forget, specific patterns found in datasets. In addition, recurrent networks are most powerful when inferring patterns that are temporal (relating to time) or sequential.

Specific RNN model types include:

- Long short-term memory models

- Sequence-to-vector models

- Vector-to-sequence models

- Sequence-to-sequence models
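The difference between some of these model types comes down to which hidden states are kept. The sketch below is a bare-bones recurrent layer in NumPy under assumed names and sizes (`simple_rnn`, 3 input features, 4 hidden units, random untrained weights): keeping every hidden state gives a sequence-to-sequence output, while keeping only the last one gives a sequence-to-vector output.

```python
import numpy as np

def simple_rnn(inputs, W_x, W_h, b):
    """Run a basic tanh recurrent layer over a sequence; return all hidden states."""
    h = np.zeros(W_h.shape[0])           # internal memory starts empty
    states = []
    for x_t in inputs:                   # one step per time step
        h = np.tanh(W_x @ x_t + W_h @ h + b)   # new memory mixes input and old memory
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
sequence = rng.normal(size=(5, 3))       # 5 time steps, 3 features each
W_x = rng.normal(size=(4, 3)) * 0.5
W_h = rng.normal(size=(4, 4)) * 0.5
b = np.zeros(4)

all_states = simple_rnn(sequence, W_x, W_h, b)   # sequence-to-sequence: one output per step
last_state = all_states[-1]                      # sequence-to-vector: final summary only
```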

A common classical alternative to using RNNs for Time Series forecasting is ARIMA (Autoregressive Integrated Moving Average).

Possible Problems with RNNs

One of the possible problems with RNNs is that they can carry ‘historical’ bias from the training data into the model. This bias can then lead to undesired discrimination.

Long Short-Term Memory (LSTM) Models

LSTMs are an improved version of Recurrent Models. LSTMs are implemented as a layer, which in a typical text model sits between the embedding layer and the dropout layer. LSTMs can converge faster than CNNs, but CNNs tend to be more stable than LSTMs.

LSTMs are great at encoding highly correlated sequential information, such as audio or text, much as CNNs are at encoding spatial information.
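The ‘remember or forget’ behavior of an LSTM comes from its gates. The following single-step sketch uses the standard LSTM equations; the function name `lstm_step`, the packing of all four gates into one weight matrix, and the gate order (input, forget, output, candidate) are conventions assumed for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step. W: (4H, D), U: (4H, H), b: (4H,)."""
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to store
    f = sigmoid(z[H:2 * H])        # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much memory to expose
    g = np.tanh(z[3 * H:])         # candidate values to write into memory
    c = f * c_prev + i * g         # updated cell state (long-term memory)
    h = o * np.tanh(c)             # updated hidden state (layer output)
    return h, c

# Demonstration with zero weights so that only the gate biases matter.
H, D = 3, 2
W = np.zeros((4 * H, D))
U = np.zeros((4 * H, H))
x_t = np.ones(D)
h_prev = np.zeros(H)
c_prev = np.array([1.0, -2.0, 0.5])

# Input gate closed, forget gate wide open: the memory is carried over unchanged.
b_remember = np.concatenate([np.full(H, -50.0), np.full(H, 50.0), np.zeros(H), np.zeros(H)])
_, c_kept = lstm_step(x_t, h_prev, c_prev, W, U, b_remember)

# Forget gate closed as well: the memory is wiped.
b_forget = np.concatenate([np.full(H, -50.0), np.full(H, -50.0), np.zeros(H), np.zeros(H)])
_, c_wiped = lstm_step(x_t, h_prev, c_prev, W, U, b_forget)
```

In a trained LSTM the gate values are computed from the data at every step, so the layer decides per time step what to keep and what to discard.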

Generative Adversarial Networks (GANs)

There are two primary types of GANs:

- Black Box

- Insider

Both, of course, have neural nets that are competing against one another. In the Insider model, the adversary has an influence on the outcome of a model which is trained not to be fooled by such an adversary. The Insider model will be the focus of our discussion.

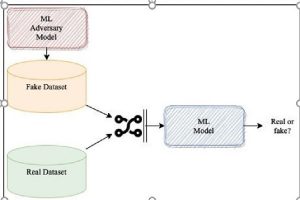

In every scenario here, a machine learning model is created to be an adversary that produces fake inputs.

Adversarial Learning

In this approach, we have two models:

- a main machine learning model, and

- an adversarial model.

The main machine learning model needs to learn to distinguish between true inputs and fake ones. If a mistake is made, the ML model needs to adjust itself to make sure it properly recognizes true input. On the other hand, the adversary needs to keep producing fake inputs with the goal of making the ML model fail.

For each model, success looks as follows:

- The main machine learning model is successful if it can correctly distinguish fake from real input.

- The adversary model is successful if it can fool the main machine learning model into passing fake input as real.

One’s success is the failure of the other, and vice versa.

During the learning process, the main machine learning model will continuously call for batches of real and fake data to learn, adjust, and repeat until a performance threshold is satisfied, or some other stopping criteria have been met.
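The alternating learning process can be sketched with a deliberately tiny example. Everything specific here is an assumption made for illustration: the one-dimensional ‘real’ data drawn from a normal distribution centered at 4, the one-parameter-pair models, the learning rate, and a fixed number of steps standing in for the stopping criterion. Real systems use deep networks and a framework's automatic differentiation instead of the hand-derived gradients below.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_toy_adversarial(steps=200, batch=64, lr=0.05, seed=0):
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0     # adversary: fake = a * noise + b
    w, c = 0.1, 0.0     # main model: P(real) = sigmoid(w * x + c)
    for _ in range(steps):                      # stopping criterion: fixed step count
        real = rng.normal(4.0, 0.5, size=batch)  # batch of real data
        noise = rng.normal(size=batch)
        fake = a * noise + b                     # batch of fake data

        # 1) Main model learns to score real high and fake low
        #    (gradient of the binary cross-entropy loss).
        p_real = sigmoid(w * real + c)
        p_fake = sigmoid(w * fake + c)
        grad_w = np.mean((p_real - 1.0) * real) + np.mean(p_fake * fake)
        grad_c = np.mean(p_real - 1.0) + np.mean(p_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # 2) Adversary adjusts itself so its fakes are scored as real
        #    (gradient of -log P(real | fake)).
        p_fake = sigmoid(w * fake + c)
        grad_a = np.mean((p_fake - 1.0) * w * noise)
        grad_b = np.mean((p_fake - 1.0) * w)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, w, c

params = train_toy_adversarial()
```

The important part is the structure of the loop: each iteration draws fresh real and fake batches, updates the main model, then updates the adversary against the freshly updated main model.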

In adversarial learning, there is no specific requirement on the adversary, other than to produce fake data.

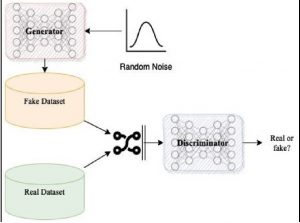

In this case, we want to maximize the correct predictions of the discriminator while, at the same time, minimizing the error of the generator, where the generator's error is producing a sample that does not fool the discriminator. Success is determined via the standard cross-entropy loss function.
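The opposing goals can be made concrete with cross-entropy losses computed from the discriminator's outputs. This is a sketch under stated assumptions: the generator loss uses the commonly used ‘non-saturating’ form, and the probability values are made up for the example.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Cross-entropy: low when real inputs score near 1 and fakes score near 0."""
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1.0 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))

def generator_loss(d_fake, eps=1e-12):
    """Low when the discriminator scores the generator's fakes near 1 (fooled)."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(np.log(d_fake)))

d_real = [0.9, 0.8]     # discriminator outputs on real samples
caught = [0.1, 0.2]     # outputs on fakes it correctly rejects
fooled = [0.9, 0.8]     # outputs on fakes it mistakes for real

g_when_caught = generator_loss(caught)
g_when_fooled = generator_loss(fooled)
d_when_catching = discriminator_loss(d_real, caught)
d_when_fooled = discriminator_loss(d_real, fooled)
```

When the fakes are caught, the discriminator's loss is low and the generator's is high; when the discriminator is fooled, the situation reverses.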

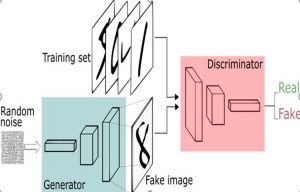

The more basic of the two options for “Insider” GANs is a simple MLP-based model. The more sophisticated approach is to use a convolutional GAN. Our team focuses its efforts on the more sophisticated approach, commonly referred to as a DCGAN (Deep Convolutional GAN).

The primary goal is to make a series of convolutional layers learn feature representations to produce fake images or to distinguish between valid or fake images.

In our approach, we call the discriminator network by a different name: ‘critic’. Whether it is called discriminator or critic, this is the network that is tasked with determining whether input is valid (from the original dataset) or fake (from an adversarial generator).

DCGANs are very powerful, and have yielded amazing research and applications. For example, even though DCGANs are based on Convolutional Neural Networks (CNNs), which are commonly associated with vision applications, some of the most striking DCGAN results have come from application areas that have nothing to do with vision. As an example, DCGANs are now being used to build designer pharmaceuticals.

Interestingly, DCGANs have also been deployed to successfully expose the vulnerabilities of other systems.

If a high-end machine learning model ever creates a solution with a billion-dollar payoff, it will almost certainly be a DCGAN that does so.

Not surprisingly, DCGANs are one of the primary application focus areas for Synthys Medical.

We WILL deliver the solution that you need!

As a first step, we will be delighted to answer any and all of your questions!