Google Cloud Platform

The Google Cloud Platform (GCP) is a portfolio of cloud computing services and solutions, originally built around the Google App Engine framework for hosting web applications from Google’s data centers. (Google App Engine was launched in 2008.) GCP is now widely regarded as one of the top three cloud computing platforms, although it still trails Amazon Web Services (AWS) and Microsoft Azure in market share. GCP’s pricing models differ significantly from those of AWS and Azure.

Following the introduction of Google App Engine, Google released a variety of complementary tools. These included a data storage layer and Google Compute Engine, an Infrastructure as a Service (IaaS) offering that supports the use of virtual machines. Once it had established itself as an IaaS provider, Google added further products, including:

- a load balancer

- DNS

- monitoring tools, and

- data analysis services

This brought GCP closer to functional parity with AWS and Azure, making it much more competitive in the cloud market.

Even though it has drawn closer to the functionality offered by AWS, GCP is no ‘cookie-cutter’ copy of AWS. GCP appears to differentiate itself through a hybrid-cloud and multi-cloud strategy.

Google Cloud

Specific Tools & Applications

We will now look at specific services, tools, and applications by functional area. The insights and details provided here are often different from, and more specific than, the ‘architectural framework’ perspective presented on the GCP-1 page.

The option groups assigned to each of these solution scenarios are shown below:

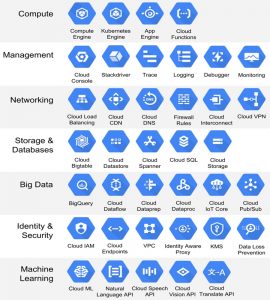

The next graphic summarizes Google Cloud Platform’s services, tools, and applications by functional area, covering the following technical/functional areas:

- Compute

- Management

- Networking

- Storage & Databases

- Big Data

- Identity & Security

- Machine Learning

The listing below presents every service, tool, and application by functional area, matching the content in the graphic above. The links associated with each header and item lead to a detailed review of that area:

Compute

- Compute Engine

- Kubernetes Engine

- App Engine

- Cloud Functions

Management

- Cloud Console

- Stackdriver

- Trace

- Logging

- Debugging

- Monitoring

Networking

- Cloud Load Balancing

- Cloud CDN

- Cloud DNS

- Cloud Firewall Rules

- Cloud Interconnect

- Cloud VPN

Storage & Databases

- Cloud Bigtable

- Cloud Datastore

- Cloud Spanner

- Cloud SQL

- Cloud Storage

Big Data

- BigQuery

- Cloud Dataflow

- Cloud Dataprep

- Cloud Dataproc

- Cloud IoT Core

- Cloud Pub/Sub

Identity & Security

- Cloud IAM

- Cloud Endpoints

- VPC

- Identity-Aware Proxy

- KMS

- Data Loss Prevention

Machine Learning

- Cloud ML

- Natural Language API

- Cloud Speech API

- Cloud Vision API

- Cloud Translation API

Google Cloud Compute Services

Google Cloud Compute Services consist of four components:

- Cloud Functions

- App Engine

- Kubernetes Engine

- Compute Engine

Each of these abstracts a different part of the solution architecture, as follows:

- Cloud Functions abstracts the application layer, and provides a control surface for service invocations

- App Engine abstracts the infrastructure, and provides a control surface at the application layer

- Kubernetes Engine abstracts the virtual machines (VMs) and provides a control surface for managing Kubernetes clusters and their hosted containers

- Compute Engine abstracts the underlying hardware and provides a control surface for infrastructure components

Before we expand on each of the four families of architectural frameworks and the twenty-one options they include, we preview the graphics we’ll be using.

We’ll now perform a detailed review of these four architectural frameworks, the twenty-one options they include, and the one hundred fourteen specific solutions they cover:

Compute Engine

GCP’s Compute Engine is Google’s Infrastructure-as-a-Service (IaaS) offering that:

- facilitates the creation of virtual machines (VMs)

- handles the allocation and assignment of CPU and memory

By default, each Compute Engine instance has a single boot persistent disk (PD) that contains the operating system. Persistent disks (PDs) are durable network storage devices that instances can access ‘like’ physical disks in a desktop or a server. The data on each persistent disk is distributed across several physical disks. Google Compute Engine (GCE) manages the physical disks and the data distribution automatically to ensure redundancy and optimal performance.

Applications on GCE commonly require additional storage space, and administrators can add one or more additional storage options to an instance. The eight available options are:

- Zonal standard PD

- Regional standard PD

- Zonal balanced PD

- Regional balanced PD

- Zonal SSD PD

- Regional SSD PD

- Local SSDs

- Cloud Storage bucket

The first seven options can be used to satisfy block storage requirements. The final option, a Cloud Storage bucket, can be used only for object storage, not block storage.

Compute Engine supports effectively all of the architectural solutions referenced at GCP-1, so they are not listed here again.

Kubernetes Engine

GCP’s Kubernetes Engine (GKE) provides a sophisticated managed environment for deploying, controlling, and scaling containerized applications on GCP’s infrastructure. A GKE environment consists of multiple machines (specifically, Compute Engine virtual machine instances) grouped together to form a cluster.

GKE clusters are powered by the Kubernetes open source cluster management system, which was originally developed by Google. Kubernetes is now maintained under the Cloud Native Computing Foundation (CNCF), a project of the Linux Foundation. Although Kubernetes is primarily Linux-based, it can also run on Microsoft Windows Server: a Windows Server ‘worker node’ is joined to a cluster whose control plane node runs on Linux.

Kubernetes is a container orchestrator, and a Kubernetes cluster must use a ‘container runtime’ in order to run. Kubernetes has commonly been used with the Docker container runtime, but it can work with any compatible container runtime, including containerd and CRI-O (both of which typically rely on the low-level runtime runc).

Google makes heavy use of Kubernetes and related design principles to deliver commonly accessed Google services. Kubernetes benefits include the following:

- automatic management

- monitoring and availability probes for application containers

- automatic scaling

- rolling updates

- etc.
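In Kubernetes, pod-level automatic scaling is handled by the Horizontal Pod Autoscaler. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below is a minimal illustration of that rule and assumes a single metric. The function name and the min/max bounds are hypothetical. The 0.1 tolerance band mirrors the autoscaler's default tolerance, not any GKE-specific setting.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Sketch of the HPA scaling rule (hypothetical helper, not a GKE API)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured minimum and maximum.
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90, 60))  # 6: ceil(4 * 1.5)
print(desired_replicas(4, 63, 60))  # 4: within 10% of target
print(desired_replicas(4, 30, 60))  # 2: scale down
```

In a real cluster this behavior is configured declaratively, for example with a HorizontalPodAutoscaler object, rather than written in application code. The sketch only shows the arithmetic.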

Running a GKE cluster on Google Cloud Compute Engine delivers a host of advanced cluster management features, including:

- Compute Engine load balancing

- Node pools

- Automatic scaling

- Automatic upgrades to node cluster software

- Cluster node auto-repair

- Logging and monitoring via Google Cloud’s operations suite

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Architectural Framework | Architectural Use Case References (GCP-1 webpage) | Notes |
|---|---|---|
| Security & Compliance Options | Binary K8S Auth | Ensures only trusted container images are deployed on Google Kubernetes Engine |
| AppDev | Microservices with GKE | Containerized microservices; auto-scaling, auto-upgrade, and auto-repair via Google SREs |

App Engine

App Engine is a fully managed, completely serverless platform for developing and hosting web applications delivered at scale. Multiple languages, libraries, and frameworks are available to develop desired applications. App Engine automatically handles provisioning servers and scaling assigned app instances based on user/system demand.

Because it provisions servers and scales automatically, App Engine abstracts the infrastructure and provides the control surface at the application layer.

Supported languages/frameworks/runtimes include:

- Go

- PHP

- Java

- Python

- Node.js

- .NET

- Ruby

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Architectural Framework | Architectural Use Case References (GCP-1 webpage) | Notes |
|---|---|---|
| AppDev | Microservices with App Engine | App Engine Standard (PaaS); Python, Java, Go, Node.js, and PHP runtimes |
| AppDev | Mobile Site Hosting | Firebase linked to App Engine as a backend app; Firebase syncs across iOS, Android, and Web, and processes data via App Engine |

Cloud Functions

GCP Cloud Functions delivers a lightweight compute solution for developers to create single-purpose, stand-alone functions that respond to Cloud events without any requirements to manage a server or runtime environment.

Cloud Functions can be written in Node.js, Python, Go, and Java, and are executed in language-specific runtimes.

The specific runtimes supported include:

- Node.js 8 Runtime

- Node.js 10 Runtime

- Python Runtime

- Go Runtime

- Java Runtime

There are two distinct types of Cloud Functions: HTTP functions and background functions.

HTTP functions are invoked from standard HTTP requests. The caller waits for the response, and HTTP functions support common HTTP request methods such as GET, PUT, POST, DELETE, and OPTIONS. Deploying a Cloud Function automatically provisions a TLS certificate, so all HTTP functions can be invoked over a secure connection.
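In the Python runtime, an HTTP function receives a Flask `Request` object. It can return anything Flask accepts as a response, such as a string or a `(body, status)` tuple. The sketch below assumes that model. To keep it runnable without Flask, `FakeRequest` is a hypothetical stand-in that exposes only `method` and `args`. The names `hello_http` and the `name` query parameter are also illustrative.

```python
from dataclasses import dataclass, field

@dataclass
class FakeRequest:
    """Minimal stand-in for flask.Request, used only to run this sketch locally."""
    method: str
    args: dict = field(default_factory=dict)

def hello_http(request):
    """HTTP-style Cloud Function that branches on the request's HTTP method."""
    if request.method == "OPTIONS":
        # Answer a CORS preflight request with an empty body and CORS headers.
        return ("", 204, {"Access-Control-Allow-Origin": "*",
                          "Access-Control-Allow-Methods": "GET"})
    if request.method != "GET":
        return ("Method not allowed", 405)
    name = request.args.get("name", "World")
    return (f"Hello, {name}!", 200)

print(hello_http(FakeRequest("GET", {"name": "GCP"})))  # ('Hello, GCP!', 200)
print(hello_http(FakeRequest("DELETE")))                # ('Method not allowed', 405)
```

After deployment, for example with `gcloud functions deploy hello_http --runtime=python311 --trigger-http`, the same function would be reached through the HTTPS URL that Cloud Functions provisions.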

The other type, background functions, handles events from the cloud infrastructure, such as messages on a Pub/Sub topic or changes in a Cloud Storage bucket.
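In the first-generation Python runtime, a background function is called with an `event` payload and a `context` object. For a Pub/Sub trigger, the message body arrives base64-encoded in `event["data"]`. The sketch below assumes that shape and builds a fake event by hand so it runs locally. The names `process_pubsub` and the `order_id` field are hypothetical. The function returns a string only so the result can be checked locally.

```python
import base64
import json

def process_pubsub(event, context):
    """Background-function-style handler for a Pub/Sub message carrying JSON."""
    # The Pub/Sub payload arrives base64-encoded in event["data"].
    payload = base64.b64decode(event["data"]).decode("utf-8")
    message = json.loads(payload)
    return f"order {message['order_id']} received"

# Simulate the event the runtime would deliver for a published JSON message.
fake_event = {"data": base64.b64encode(json.dumps({"order_id": 42}).encode("utf-8")).decode("ascii")}
print(process_pubsub(fake_event, context=None))  # order 42 received
```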

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Architectural Framework | Architectural Use Case References (GCP-1 webpage) | Notes |
|---|---|---|
| AppDev & Serverless | Serverless Web Scraping with Cloud Functions | Serverless, scalable, event-driven web scraping with Cloud Functions, Firestore & Scheduler; built-in support for Headless Chrome enables sophisticated UI testing & web scraping |

Cloud Console

GCP’s Cloud Console is a sophisticated web administration user-interface.

It provides the ability to access, view, and manage all facets of GCP cloud applications, including but not limited to:

- web applications

- data analysis

- virtual machines

- databases

- datastore

- networking &

- developer services.

GCP’s Cloud Console allows an administrator to deploy, scale, and diagnose production issues via the web-based interface.

An administrator may search to quickly find resources and connect to instances via SSH in the browser.

DevOps workflows can be administered away from the office using native iOS and Android applications.

Development tasks are accomplished via Cloud Shell.


The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Cloud Console Options | Architectural Use Case References (GCP-1 webpage) |
|---|---|
| Cloud Console | Universally used across GCP applications |

Stackdriver is now Operations

GCP Stackdriver has been fully merged into the Cloud Console and replaced by the Operations suite of products, which includes:

- Cloud Logging

- Cloud Monitoring

- Cloud Trace

- Cloud Debugger

- Cloud Profiler

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Stackdriver (now GCP Operations) Reference | Use Case / Deployment Configuration |
|---|---|
| Retail & eCommerce / PCI (Payment Card Industry) | Cloud Monitoring with Stackdriver, BigQuery & Cloud Logging |

Trace

GCP Trace, now called Cloud Trace, is part of GCP’s Operations Suite.

Cloud Trace is a distributed tracing system that collects latency data from cloud applications and transfers it for display in the Google Cloud Console.

Using Cloud Trace, you can review how requests propagate through an application and obtain detailed, near real-time performance insights.

Cloud Trace systematically reviews all of an application’s traces to generate in-depth latency reports.

Cloud Trace can capture traces from all of a project’s VMs, containers, and App Engine applications.

Cloud Trace provides a variety of tools and filters with which you can quickly find the source and root cause of bottlenecks. It also continuously retrieves and analyzes trace data from a project to highlight recent changes in performance, presented as latency distributions. These latency distributions, obtained via Analysis Reports, can be compared across time periods or application versions. Significant changes in an application’s latency profile are automatically signaled via alerts.
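The version comparison described above can be illustrated with a small, self-contained sketch. It computes latency percentiles for two sets of traces and flags any percentile that regressed. This is not Cloud Trace's API or its analysis algorithm. The nearest-rank percentile method, the 1.2× regression threshold, and the sample latencies are all assumptions chosen for illustration.

```python
def percentile(samples, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = -(-p * len(ordered) // 100)  # integer ceiling of p * n / 100
    return ordered[max(rank, 1) - 1]

def compare_versions(baseline, candidate, pcts=(50, 95, 99), threshold=1.2):
    """Flag each percentile where the candidate exceeds `threshold` times the baseline."""
    report = {}
    for p in pcts:
        before, after = percentile(baseline, p), percentile(candidate, p)
        report[p] = (before, after, after > threshold * before)
    return report

# Hypothetical per-request latencies (ms) taken from traces of two application versions.
v1 = [100] * 18 + [200, 400]
v2 = [105] * 18 + [500, 900]
for p, (before, after, regressed) in compare_versions(v1, v2).items():
    print(f"p{p}: {before} ms -> {after} ms{'  REGRESSION' if regressed else ''}")
```

The median barely moves here, while the tail percentiles regress sharply. Comparing whole distributions, rather than averages, surfaces exactly this kind of change.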

Cloud Trace provides language-specific SDKs and is currently available for:

- Java

- Node.js

- Ruby

- Go

Cloud Trace can analyze projects running on VMs, whether or not those VMs are managed by GCP. The Cloud Trace API can be used to submit and retrieve trace data from any source. All projects running on App Engine are captured automatically.

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Operations Reference | Use Case / Deployment Configuration |
|---|---|
| Cloud Trace | Part of GCP Operations Suite; used across GCP applications written in Java, Node.js, Ruby, and Go |

Cloud Logging

GCP Logging, now called Cloud Logging, is part of GCP’s Operations Suite.

Cloud Logging allows an administrator to store, search, analyze, monitor, and alert on log data and events from Google Cloud. The API also allows ingestion of any custom log data from any source. Cloud Logging is a fully managed service that delivers at scale and can ingest application and system log data from any number of VMs.
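One common way to send custom log data is structured logging. On services such as Cloud Run, Cloud Functions, and GKE, a JSON object written as a single line to standard output is ingested by Cloud Logging as a structured entry. Special fields such as `severity` and `message` are mapped onto the log entry. The minimal Python sketch below assumes that environment. The `log_structured` helper and the `order_id` field are hypothetical.

```python
import json
import sys

def log_structured(severity, message, **fields):
    """Write one JSON log line to stdout and return it for inspection."""
    entry = {"severity": severity, "message": message, **fields}
    line = json.dumps(entry)
    print(line, file=sys.stdout)
    return line

line = log_structured("ERROR", "payment failed", order_id=42)
# {"severity": "ERROR", "message": "payment failed", "order_id": 42}
```

In the Logs Explorer, a query such as `severity>=ERROR` or `jsonPayload.order_id=42` would then select entries like this one.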

Cloud Logging works seamlessly with:

- Cloud Monitoring

- Cloud Trace

- Error Reporting, and

- Cloud Debugger

This linkage allows an administrator to navigate between incidents, charts, traces, errors, and logs. This facilitates determining the root cause of problems in the system/applications.

Cloud Logging is designed with cluster deployment and management built in. As a fully managed solution, it lets the administrator focus on building projects rather than on the details of administration.

Cloud Logging keeps log data in one location, regardless of whether your solution is multi-cloud or your projects require migration to another cloud.

Advanced log analysis, including the development of real-time metrics, can be performed using BigQuery. Generated metrics can be transferred to Cloud Monitoring, where they can be used to build dashboards.

Admin Activity audit logs, which capture administrative actions within Google Cloud, are retained for 400 days at no additional charge. Data Access audit logs are retained for 30 days by default. Logs can be kept longer than their default retention period by exporting them to Cloud Storage.

Cloud Pub/Sub is used for integration with external systems, and the export of logs from GCP to them.

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Operations Reference | Use Case / Deployment Configuration |
|---|---|
| Cloud Logging | Part of GCP Operations Suite; used across GCP |

Cloud Debugger

GCP Cloud Debugger is a built-in feature of Google Cloud that allows administrators to inspect the state of a running application in real time, without stopping or slowing it down. Cloud Debugger allows the capture of the call stack and variables at any location in the source code, without impacting response time for users.

Cloud Debugger can be used with production applications. A snapshot captures the call stack and variables at a specific code location the first time any instance executes that code. A logpoint injects logging into the running application without redeploying it. It behaves as if it were part of the deployed code, sending log messages to the same log stream.

Cloud Debugger works with version control systems such as:

- Cloud Source Repositories

- GitHub

- Bitbucket, or

- GitLab.

Cloud Debugger can display the correct version of the source code when any of these version control systems is used. When other source repositories are used, the source files can be supplied as part of the build-and-deploy process.

Cloud Debugger allows seamless collaboration with other members of your team, by sharing the debug session via the Console URL.

Cloud Debugger is integrated into existing development workflows. Debugging snapshots can be taken directly from:

- Cloud Logging

- Error Reporting

- dashboards

- integrated development environments (IDEs), and the

- gcloud command-line interface.

Cloud Debugger is automatically enabled for all App Engine applications. It must be manually enabled for Google Kubernetes Engine (GKE) or Compute Engine.

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Operations Reference | Use Case / Deployment Configuration |
|---|---|
| Cloud Debugger | Part of GCP Operations Suite |

Cloud Monitoring

GCP Cloud Monitoring provides insights into the performance, uptime, and general health of all cloud-associated applications. It collects:

- metrics

- events

- metadata

from:

- Google Cloud

- hosted uptime probes

- application instrumentation

and from other common application components, including:

- Cassandra

- Nginx

- Apache Web Server &

- Elasticsearch

Cloud Monitoring processes the related data and generates insights via:

- dashboards

- charts

- alerts.

Cloud Monitoring alerting assists with oversight by sending notifications through channels such as:

- SMS

- Slack

- PagerDuty

Cloud Monitoring provides default dashboards for most Google Cloud services out of the box. These dashboards incorporate not only metrics but also critical metadata, which gives insight into the relationships between components. Cloud Monitoring also supports monitoring of non-GCP environments through partnerships, agents, and APIs. Service Level Objectives (SLOs) may be defined for applications, with SLO violations triggering alerts.
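SLO alerting rests on simple error-budget arithmetic. The sketch below assumes a request-based SLO (good requests ÷ total requests) evaluated over one compliance period. The function name and the request counts are hypothetical. Cloud Monitoring performs the equivalent calculation for you. `Fraction` keeps the arithmetic exact, which avoids floating-point rounding in values such as 1 − 0.999.

```python
from fractions import Fraction

def error_budget(slo: str, good: int, total: int) -> dict:
    """Summarize a request-based SLO over one compliance period."""
    target = Fraction(slo)               # "0.999" -> 999/1000, kept exact
    bad = total - good
    allowed_bad = (1 - target) * total   # the error budget, in requests
    remaining = allowed_bad - bad
    compliance = Fraction(good, total)
    return {
        "compliance": compliance,
        "allowed_bad": allowed_bad,
        "remaining_fraction": remaining / allowed_bad,  # negative once overspent
        "violated": compliance < target,                # the condition an SLO alert watches
    }

status = error_budget("0.999", good=999_600, total=1_000_000)
print(float(status["compliance"]))          # 0.9996
print(status["allowed_bad"])                # 1000
print(float(status["remaining_fraction"]))  # 0.6
print(status["violated"])                   # False
```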

Lastly, Cloud Monitoring can provide information on the availability and uptime of internet-accessible:

- URLs

- VMs

- APIs, and

- load-balancers

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Operations Reference | Use Case / Deployment Configuration |
|---|---|
| Cloud Monitoring | Part of GCP Operations Suite |

Cloud Load Balancing

GCP Cloud Load Balancing allows applications on Compute Engine to scale rapidly from cold to fully active. Cloud Load Balancing can manage resources in a single region or across multiple regions to maximize availability and reduce latency. It also supports a single anycast IP address that front-ends all backend instances worldwide, as well as intelligent, preconfigured autoscaling.

It supports cross-region load balancing, including automatic multi-region failover. Failover shifts traffic away from a backend in measured fractions if that backend becomes unhealthy.
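Very roughly, this routing behavior can be modeled as choosing the lowest-latency region that is both healthy and has spare capacity. When that region fails either test, traffic spills over to the next-best region. This is a simplified assumption for illustration, not Google's actual load-balancing algorithm. The function name, round-trip times, and capacity figures are hypothetical, and the region names are only examples.

```python
def pick_region(client_rtts_ms, healthy, capacity_left):
    """Return the lowest-latency region that is healthy and has spare capacity."""
    candidates = [
        (rtt, region)
        for region, rtt in client_rtts_ms.items()
        if healthy.get(region, False) and capacity_left.get(region, 0) > 0
    ]
    if not candidates:
        return None  # no usable backend anywhere
    return min(candidates)[1]

rtts = {"us-east1": 12, "europe-west1": 85, "asia-east1": 170}
healthy = {"us-east1": True, "europe-west1": True, "asia-east1": True}
capacity = {"us-east1": 50, "europe-west1": 80, "asia-east1": 80}
print(pick_region(rtts, healthy, capacity))  # us-east1
healthy["us-east1"] = False                  # simulate a regional failure
print(pick_region(rtts, healthy, capacity))  # europe-west1
```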

Unlike DNS-based global load balancing solutions, Cloud Load Balancing reacts quickly and automatically to changes in users, traffic, network, backend performance, and related conditions.

Cloud Load Balancing is a fully distributed, software-defined, managed service for all traffic worldwide. It is not an instance-, hardware-, or device-based solution. It therefore avoids both the physical load-balancing infrastructure and the high-availability and scaling challenges that such solutions bring.

Cloud Load Balancing can be applied to all traffic, including:

- HTTP(S)

- TCP/SSL &

- UDP

In addition to these standard protocols, Cloud Load Balancing provides support for the latest application delivery protocols, including:

- HTTP/2 with gRPC &

- QUIC support for GCP’s HTTPS load balancers

Cloud Load Balancing also supports internal load balancing for internal client instances, without any exposure to the internet. This is accomplished via Andromeda, GCP’s software-defined network virtualization platform. Internal load balancing also supports clients connecting across VPNs.

Central management of SSL certificates and decryption is delivered via SSL offload. Encryption can also be enabled between the load balancing layer and the backend.

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Cloud Load Balancing Reference | Use Case / Deployment Configuration |
|---|---|
| Networking / Latency-Optimized Travel Sample Architecture | Serve users from the region closest to their location via Google’s global Cloud Load Balancing |
| DevOps / Jenkins on K8s | Jenkins namespace, Container Registry, Google Load Balancer |

Cloud CDN

GCP Cloud CDN (Content Delivery Network) uses GCP’s globally distributed edge points of presence to cache content served through external HTTP(S) load balancing closer to your users. Caching content at the edges of GCP’s network delivers content to users faster while reducing delivery costs.
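Edge caching of this kind generally keeps a copy of a response until its freshness lifetime expires. That lifetime is typically set by the origin, for example through a `Cache-Control: max-age` value. The toy model below assumes exactly that and nothing more. `EdgeCache` and the origin function are hypothetical, and a real CDN also handles cache keys, revalidation, and invalidation. An injectable clock makes the expiry behavior easy to demonstrate.

```python
import time

class EdgeCache:
    """Toy edge cache: serves a stored copy until its max-age expires."""

    def __init__(self, fetch_from_origin, clock=time.monotonic):
        self._fetch = fetch_from_origin  # callable: url -> (body, max_age_seconds)
        self._clock = clock
        self._store = {}                 # url -> (body, expires_at)
        self.origin_hits = 0

    def get(self, url):
        entry = self._store.get(url)
        now = self._clock()
        if entry and entry[1] > now:
            return entry[0], "HIT"       # fresh copy served from the edge
        body, max_age = self._fetch(url)
        self.origin_hits += 1
        self._store[url] = (body, now + max_age)
        return body, "MISS"

def fake_origin(url):
    return f"content of {url}", 60       # body and max-age in seconds

now = [0.0]
cache = EdgeCache(fake_origin, clock=lambda: now[0])
print(cache.get("/logo.png")[1])  # MISS (fetched from origin)
print(cache.get("/logo.png")[1])  # HIT (served from edge)
now[0] = 61.0                     # advance past max-age
print(cache.get("/logo.png")[1])  # MISS (expired, refetched)
print(cache.origin_hits)          # 2
```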

GCP’s anycast architecture gives each site a single global IP address, providing consistent performance worldwide and simpler management. In addition, GCP’s edge caches are peered with nearly every major end-user ISP globally, improving connectivity for users around the world.

Consistent with its links to Cloud Load Balancing, Cloud CDN supports newer protocols, such as:

- HTTP/2 &

- QUIC

These two protocols are designed to improve site performance for mobile users and users in emerging markets.

Cloud CDN is tightly integrated with Cloud Monitoring and Cloud Logging. It delivers detailed latency metrics without customization, along with HTTP request logs for deeper insight. These logs can be exported to Cloud Storage and/or BigQuery for further analysis with minimal effort.

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Cloud CDN Reference | Use Case / Deployment Configuration |
|---|---|
| Cloud CDN | Cloud CDN is commonly used with Cloud Load Balancing |

Cloud DNS

GCP Cloud DNS is a high-performance, resilient, global Domain Name System (DNS) service that publishes domain names to the global DNS database in an efficient manner.

DNS is a hierarchical distributed database that stores IP addresses and other data and allows them to be retrieved by name. Cloud DNS lets you publish zones and records in DNS without the burden of managing your own DNS servers and software.

Cloud DNS offers both public zones and private managed DNS zones. A public zone is visible to the public internet, while a private zone is visible only from one or more local VPC networks specified.
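The difference between the two zone types can be sketched as split-horizon resolution. A client inside an authorized VPC network sees the private zone, while every other client sees only the public one. The model below is a simplification under that assumption. `Zone`, `resolve`, the network name `prod-vpc`, and all records are hypothetical. The first visible zone that covers the name is treated as authoritative.

```python
from dataclasses import dataclass, field

@dataclass
class Zone:
    dns_name: str                 # zone suffix, e.g. "example.com."
    records: dict                 # fully qualified name -> IPv4 address
    private: bool = False
    visible_networks: set = field(default_factory=set)

    def covers(self, name):
        return name == self.dns_name or name.endswith("." + self.dns_name)

def resolve(name, zones, client_network=None):
    """Answer from the first zone that is visible to the client and covers the name."""
    for zone in zones:
        if zone.private and client_network not in zone.visible_networks:
            continue  # private zones are invisible outside their VPC networks
        if zone.covers(name):
            return zone.records.get(name)  # authoritative: None means no such record
    return None

public = Zone("example.com.", {"www.example.com.": "203.0.113.10"})
private = Zone("example.com.",
               {"www.example.com.": "10.0.0.10", "db.example.com.": "10.0.0.5"},
               private=True, visible_networks={"prod-vpc"})
zones = [private, public]  # private zone consulted first for authorized clients

print(resolve("www.example.com.", zones, "prod-vpc"))  # 10.0.0.10
print(resolve("www.example.com.", zones))              # 203.0.113.10
print(resolve("db.example.com.", zones))               # None
```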

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Cloud DNS Reference | Use Case / Deployment Configuration |
|---|---|
| Cloud Load Balancing | Cloud CDN is commonly used with Cloud Load Balancing |

Firewall Rules

GCP firewall rules are defined at the network level and apply only to the network in which they are created. Each rule name must be unique within the GCP project. Firewall rules can be applied across an organization or to a specific Virtual Private Cloud (VPC) network. Our discussion here focuses on VPC firewall rules.

VPC firewall rules determine whether to allow or deny connections to or from virtual machine (VM) instances, based on the configuration specification. Enabled VPC firewall rules are always enforced, protecting instances regardless of their configuration/operating system, whether or not they have started up.

Every VPC network functions as a distributed firewall. While firewall rules are defined at the network level, connections are allowed or denied on a per-instance basis. VPC firewall rules control connections not only between an instance and other networks, but also between individual instances on the same network.

A VPC firewall rule specifies an individual VPC network and a set of components that define what that rule does. The set of components target certain types of traffic, based on the traffic’s:

- protocol

- ports

- sources &

- destinations

You create or modify VPC firewall rules by using:

- Cloud Console

- gcloud command-line tool &

- REST API

The target component of a firewall rule defines the instances to which it applies. In addition to the firewall rules you create, GCP provides other rules that can affect incoming (ingress) or outgoing (egress) connections (not discussed further here). Each firewall rule applies to either incoming (ingress) or outgoing (egress) connections, never both.

At the time of writing, firewall rules support only IPv4 connections, not IPv6 connections. When defining a source for an ingress rule or a destination for an egress rule by address, you can use only an IPv4 address or an IPv4 block in CIDR notation.

Each firewall rule’s action is either allow or deny, and the rule applies to connections for as long as it is enforced. A rule can be disabled for troubleshooting purposes.
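These components combine into a simple evaluation procedure. The Python sketch below is a simplified model that rests on several assumptions:

- rules target every instance (target tags and service accounts are omitted)
- addresses are IPv4 only
- a lower priority number takes precedence
- a deny rule wins over an allow rule of equal priority
- traffic that matches no rule falls back to GCP's implied rules (deny ingress, allow egress)

`FirewallRule`, `evaluate`, and the example rules are hypothetical.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class FirewallRule:
    name: str
    direction: str   # "INGRESS" or "EGRESS"
    action: str      # "allow" or "deny"
    priority: int    # 0-65535; a lower number takes precedence
    protocol: str    # "tcp", "udp", "icmp", or "all"
    ports: tuple     # empty tuple means all ports
    ranges: tuple    # source CIDRs (ingress) or destination CIDRs (egress)

    def matches(self, direction, protocol, port, address):
        if direction != self.direction:
            return False
        if self.protocol not in ("all", protocol):
            return False
        if self.ports and port not in self.ports:
            return False
        ip = ipaddress.IPv4Address(address)
        return any(ip in ipaddress.IPv4Network(cidr) for cidr in self.ranges)

def evaluate(rules, direction, protocol, port, address):
    """Return the action of the highest-precedence matching rule."""
    matching = [r for r in rules if r.matches(direction, protocol, port, address)]
    if not matching:
        # Fall back to the implied rules: deny all ingress, allow all egress.
        return "deny" if direction == "INGRESS" else "allow"
    # Lowest priority number wins; at equal priority, deny sorts before allow.
    best = min(matching, key=lambda r: (r.priority, r.action != "deny"))
    return best.action

rules = [
    FirewallRule("allow-https", "INGRESS", "allow", 1000, "tcp", (443,), ("0.0.0.0/0",)),
    FirewallRule("deny-bad-range", "INGRESS", "deny", 900, "all", (), ("198.51.100.0/24",)),
    FirewallRule("allow-ssh-office", "INGRESS", "allow", 1000, "tcp", (22,), ("203.0.113.0/24",)),
]
print(evaluate(rules, "INGRESS", "tcp", 443, "192.0.2.7"))     # allow
print(evaluate(rules, "INGRESS", "tcp", 443, "198.51.100.9"))  # deny (priority 900 wins)
print(evaluate(rules, "INGRESS", "tcp", 22, "192.0.2.7"))      # deny (implied rule)
print(evaluate(rules, "EGRESS", "tcp", 443, "192.0.2.50"))     # allow (implied rule)
```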

A firewall rule must be assigned to a VPC network when it is created. While the rule is enforced at the instance level, its configuration is always associated with, and specific to, that VPC network. Thus, firewall rules cannot be shared among VPC networks, including networks connected by VPC Network Peering or by Cloud VPN tunnels.

GCP VPC firewall rules are stateful. A stateful firewall monitors the full state of active network connections. Stateful firewalls therefore analyze the complete context of the traffic and data packets seeking entry to a network, rather than evaluating discrete packets in isolation.

Once a certain kind of traffic has been approved by a stateful firewall, it is added to a state table and can travel more freely into the protected network. Traffic and data packets that don’t successfully complete this required handshake will be blocked. By analyzing multiple factors before adding a type of connection to an approved list, such as TCP stages, stateful firewalls are able to observe traffic streams in their entirety. They are effectively more ‘intelligent’ than stateless firewalls, but are also more susceptible to DDoS attacks than stateless firewalls.
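Connection tracking is what makes this work. Once an allowed connection is recorded in the state table, its return traffic is admitted without a matching rule in the opposite direction. In GCP, for example, responses to egress traffic permitted by a rule are allowed back in. The toy model below assumes a state table keyed on the local and remote address/port pair. `StatefulFirewall` is hypothetical, and a real implementation would also track protocol, connection state, and timeouts.

```python
class StatefulFirewall:
    """Toy stateful firewall: outbound rules only; replies to tracked flows are admitted."""

    def __init__(self, allowed_outbound_ports):
        self.allowed_outbound_ports = set(allowed_outbound_ports)
        # Each entry: (local_ip, local_port, remote_ip, remote_port)
        self.state_table = set()

    def outbound(self, local_ip, local_port, remote_ip, remote_port):
        """Check an outgoing connection and record it in the state table if allowed."""
        if remote_port not in self.allowed_outbound_ports:
            return False
        self.state_table.add((local_ip, local_port, remote_ip, remote_port))
        return True

    def inbound(self, remote_ip, remote_port, local_ip, local_port):
        """Admit incoming packets only if they belong to a tracked connection."""
        return (local_ip, local_port, remote_ip, remote_port) in self.state_table

fw = StatefulFirewall(allowed_outbound_ports={443})
print(fw.outbound("10.0.0.2", 50000, "203.0.113.5", 443))  # True: allowed and tracked
print(fw.inbound("203.0.113.5", 443, "10.0.0.2", 50000))   # True: reply to tracked flow
print(fw.inbound("198.51.100.7", 443, "10.0.0.2", 50000))  # False: unsolicited
```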

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Firewall Rules Reference | Use Case / Deployment Configuration |
|---|---|
| Universal application | Firewalls are an integrated and universal component of security for GCP |

Cloud Interconnect

GCP Cloud Interconnect provides low-latency, highly available connections that enable the reliable transfer of data between an on-premises facility and GCP Virtual Private Cloud (VPC) networks. Cloud Interconnect connections also extend internal IP addressing, so internal IP addresses are directly reachable from both networks. No NAT device or VPN tunnel is needed to reach internal IP addresses.

Cloud Interconnect offers two options for extending your on-premises network.

- Dedicated Interconnect &

- Partner Interconnect

Dedicated Interconnect provides a direct physical connection between an on-premises network and GCP’s VPC networks.

Partner Interconnect provides connectivity between an on-premises network and GCP VPC networks through a supported service provider.

For both Dedicated Interconnect and Partner Interconnect, traffic between the on-premises network and the GCP VPC network never traverses the public internet; only dedicated private connections are used. One advantage of bypassing the public internet is that traffic takes fewer hops. That means fewer points of failure where traffic might be dropped or disrupted.

Dedicated Interconnect, Partner Interconnect, and two other GCP options (Direct Peering and Carrier Peering) can optimize egress (outbound) traffic from a GCP VPC network, and each of them can reduce egress costs. Cloud VPN by itself does not reduce egress costs.

Cloud Interconnect along with Private Google Access for on-premises hosts enables on-premises hosts to use internal IP addresses rather than external IP addresses to reach Google APIs and services.

Cloud Interconnect provides very low latency and high availability, but with higher overhead and cost. A lower-cost alternative is to use Cloud VPN to set up IPsec VPN tunnels between an on-premises network and a GCP VPC network. IPsec VPN tunnels use the public internet but encrypt the data in transit using industry-standard IPsec protocols.

The table below reviews specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

| Cloud Interconnect Reference | Use Case / Deployment Configuration |
|---|---|
| Universal application | |

Cloud VPN

GCP Cloud VPN securely connects an on-premises peer network to a Virtual Private Cloud (VPC) network through an IPsec VPN connection. Traffic between the two networks is encrypted by one VPN gateway and decrypted by the other, which protects the data as it travels over the public internet. Two instances of Cloud VPN can also be connected to each other.

GCP offers two types of Cloud VPN gateways:

- Classic VPN &

- HA VPN

Classic VPN gateways utilize:

- single interface

- single external IP address, &

- support tunnels using dynamic (BGP) or static routing (route based or policy based)

GCP Classic VPN gateways provide an SLA of 99.9% service availability.

When referenced in GCP API documentation or in gcloud commands, Classic VPN gateways are referred to as target VPN gateways.

Within GCP, major Classic VPN functionality was scheduled for deprecation on October 31, 2021.

The more recent GCP offering in this area is HA VPN.

HA VPN is a high-availability (HA) Cloud VPN solution. It securely connects an on-premises network to a Virtual Private Cloud (VPC) network in a single region through an IPsec VPN connection. HA VPN provides an SLA of 99.99% service availability, higher than Classic VPN’s 99.9%.

Each HA VPN gateway interface supports multiple tunnels, and an administrator can create multiple HA VPN gateways. It is possible to configure an HA VPN gateway with only one active interface and one public IP address, but this configuration does not qualify for the 99.99% service availability SLA.

In GCP API documentation and in gcloud commands, HA VPN gateways are referred to as VPN gateways rather than target VPN gateways (a term reserved for Classic VPN). Forwarding rules do not need to be created manually for HA VPN gateways, but they do need to be created for Classic VPN gateways.
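The sketch below puts SLA percentages such as 99.9% and 99.99% in concrete terms by converting an availability target into the maximum downtime it permits. It assumes a 30-day measurement period; actual SLA terms define their own periods and exclusions. The function name is hypothetical. `Decimal` is used so that values such as 100 − 99.9 are computed exactly.

```python
from decimal import Decimal

def allowed_downtime_minutes(sla_percent: str, days: int = 30) -> Decimal:
    """Maximum minutes of unavailability permitted by an SLA over `days` days."""
    total_minutes = Decimal(days * 24 * 60)
    return total_minutes * (Decimal(100) - Decimal(sla_percent)) / Decimal(100)

for sla in ("99.9", "99.99"):
    print(sla, allowed_downtime_minutes(sla))
# 99.9 43.2
# 99.99 4.32
```

Moving from 99.9% to 99.99% shrinks the permitted monthly downtime by a factor of ten, from roughly 43 minutes to roughly 4.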

The table below summarizes the specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

Cloud VPN Reference

| Use Case | Deployment Configuration |
|---|---|
| Universal application | |

Cloud Bigtable


GCP Cloud Bigtable is a fully managed, scalable NoSQL database service designed for large analytical and operational workloads.

The most appropriate data use cases for Cloud Bigtable are:

- Time-series data, such as CPU and memory usage over time for multiple servers.

- Marketing data, such as purchase histories and customer preferences.

- Financial data, such as transaction histories, stock prices, and currency exchange rates.

- Internet of Things data, such as usage reports from energy meters and home appliances.

- Graph data, such as information about how users are connected to one another.

Cloud Bigtable stores data in massively scalable but sparsely populated tables. Each table is a sorted key/value map. These tables can scale to billions of rows and thousands of columns, enabling the storing of terabytes or even petabytes of data. A single value in each row is indexed – this value is known as the row key. Cloud Bigtable is ideal for storing very large amounts of single-keyed data with very low latency. It supports high read and write throughput at low latency, and it is an ideal data source for:

- MapReduce operations

- stream processing/analytics

- machine learning applications

Each row/column intersection can contain multiple cells, or versions, at different timestamps, providing a record of how the stored data has been altered over time. Cloud Bigtable tables are sparse – if a cell does not contain any data, it does not take up any space.
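The data model described above can be illustrated with a small, in-memory Python sketch. It is not the Cloud Bigtable client API: the class `SparseTable`, its methods, and the `server#time` row-key layout are hypothetical, chosen only to show sorted row keys, sparse columns, and timestamped cell versions.

```python
from bisect import insort
from collections import defaultdict


class SparseTable:
    """Toy model of a Bigtable-style table (hypothetical; not the Bigtable API).

    row key -> "family:qualifier" column -> list of (timestamp, value) cells.
    Only written cells are stored, so empty cells take up no space.
    """

    def __init__(self):
        self._rows = defaultdict(dict)

    def write(self, row_key, column, value, timestamp):
        # Each write adds a new version; versions stay ordered by timestamp.
        cells = self._rows[row_key].setdefault(column, [])
        insort(cells, (timestamp, value))

    def read_latest(self, row_key, column):
        # Return the most recent version of a cell, or None if it is empty.
        cells = self._rows.get(row_key, {}).get(column)
        return cells[-1][1] if cells else None

    def versions(self, row_key, column):
        # Full history of a cell, oldest first.
        return list(self._rows.get(row_key, {}).get(column, []))

    def scan_prefix(self, prefix):
        # Bigtable keeps rows sorted by row key, so a prefix is one contiguous
        # range; sorting here mimics that ordering.
        return [key for key in sorted(self._rows) if key.startswith(prefix)]


# Usage: time-series rows keyed as "<server>#<time>" (hypothetical layout).
table = SparseTable()
table.write("server1#2021-01-01T00:00", "metrics:cpu", 0.42, timestamp=1)
table.write("server1#2021-01-01T00:00", "metrics:cpu", 0.57, timestamp=2)
table.write("server2#2021-01-01T00:00", "metrics:mem", 0.80, timestamp=1)
print(table.read_latest("server1#2021-01-01T00:00", "metrics:cpu"))  # 0.57
print(table.scan_prefix("server1#"))  # ['server1#2021-01-01T00:00']
```

Putting the server name first in the row key keeps each server's readings adjacent, so a prefix scan retrieves one server's time series without touching other rows.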

Applications access Cloud Bigtable via multiple client libraries, including a supported extension to the Apache HBase library for Java. Consequently, it integrates well with the existing Apache ecosystem of open-source Big Data software.

Cloud Bigtable has a long history with GCP, and is built on proven infrastructure that powers a number of Google products, including Search & Maps.

The closest Microsoft Azure equivalent of GCP’s Cloud Bigtable is Azure Cosmos DB.

The table below summarizes the specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

Cloud Bigtable Applications

| Use Case | Deployment Configuration |
|---|---|
| Energy / Oil and Gas | SCADA-based, deploying Cloud IoT, Pub/Sub, Dataflow, Bigtable, Data Studio; the business side uses Cloud SQL, Storage, ML Engine, Datalab |
| Healthcare | Patient data via mobile device to Cloud Pub/Sub, to Bigtable; advanced analytics on stored data via Prediction API or TensorFlow; notifications |
| Retail & eCommerce / Beacons and Targeted Marketing | A beacon is a proximity notification; uses Dataflow, Pub/Sub & Bigtable |
| Database / Gaming Backend Database | Use Cloud Spanner for match history and Cloud Bigtable to log events |
| Big Data / Time-series Analysis | OpenTSDB time-series database engine on GKE, backed by the NoSQL database Cloud Bigtable |

Cloud Datastore


GCP Cloud Datastore is a highly scalable NoSQL database. Cloud Datastore automatically handles sharding and replication, delivering a highly available and durable database that scales automatically to handle an application’s growing load. Despite being a NoSQL database, Datastore provides a number of familiar capabilities, including:

- ACID transactions,

- SQL-like queries,

- indexes

Cloud Datastore uses a RESTful interface and can serve as the integration point for solutions that span App Engine and Compute Engine.

Given that Cloud Datastore is a schemaless database, less emphasis needs to be placed on managing the underlying data structure as an application evolves. In addition, the query language is very simple.

Datastore supports a variety of data types, including:

- integers

- floating-point numbers

- strings

- dates &

- binary data
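As a rough illustration of what "schemaless" means in practice, the following Python sketch builds entities as plain dictionaries and checks only that each property value has one of the types listed above. The helper `make_entity` is hypothetical and not part of any Datastore client library; note that two entities of the same kind can carry different properties.

```python
import datetime

# Value types accepted by this sketch, mirroring the list above. The real
# service supports further types (for example booleans, arrays and keys).
SUPPORTED_TYPES = (int, float, str, datetime.datetime, bytes)


def make_entity(kind, name, **properties):
    """Build a schemaless entity as a dict (hypothetical helper).

    No schema is enforced: only each property's value type is checked.
    """
    for prop, value in properties.items():
        if not isinstance(value, SUPPORTED_TYPES):
            raise TypeError(f"unsupported type for {prop!r}: {type(value).__name__}")
    return {"kind": kind, "name": name, "properties": dict(properties)}


# Two entities of the same kind with different properties.
alice = make_entity("User", "alice", email="alice@example.com", age=30)
bob = make_entity("User", "bob", joined=datetime.datetime(2020, 5, 1), avatar=b"\x89PNG")
print(sorted(alice["properties"]), sorted(bob["properties"]))
# ['age', 'email'] ['avatar', 'joined']
```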

The supported programming languages include:

- .Net

- Go

- Java

- JavaScript (Node.js)

- PHP

- Python

- Ruby

Cloud Datastore users are being encouraged to migrate to Cloud Firestore, which became available on GCP in 2017.

The table below summarizes the specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

Cloud Datastore Applications

| Use Case | Deployment Configuration |
|---|---|
| Serverless / Platform Services on App Engine | Gaming on GCP via RESTful HTTP endpoints. Cloud Datastore, with a Memcache front end, provides the NoSQL database; GKE with Agones and Open Match autoscales server resources. |

Cloud Firestore Applications

| Use Case | Deployment Configuration |
|---|---|
| AppDev & Serverless / Serverless Web Scraping w/ Cloud Functions | Event-driven web scraping with Cloud Functions, Firestore & Cloud Scheduler. Built-in support for Headless Chrome provides sophisticated UI testing & web scraping. |

Cloud Spanner


Cloud Spanner is a fully managed, mission-critical, relational database service that offers transactional consistency at worldwide scale, along with:

- schemas

- ACID transactions

- SQL compatibility (ANSI 2011 with extensions), &

- automatic, synchronous replication for high availability

Traditionally, when building cloud applications, DBAs and developers have had to choose between:

- traditional relational databases that guarantee transactional (ACID) consistency, or

- NoSQL databases that offer easy horizontal scaling and data distribution.

Cloud Spanner is unusual because it offers both of these critical capabilities in a single, integrated, fully managed service. In addition, it functions as a Database as a Service (DBaaS) offering.

Cloud Spanner keeps application development familiar to traditional relational DBAs by supporting the standard tools and languages common to relational database environments. It is an excellent solution for operational workloads traditionally supported by relational databases, including:

- inventory management

- financial transactions &

- e-commerce

Cloud Spanner supports:

- distributed transactions

- schemas and DDL statements

- SQL queries &

- JDBC drivers

Cloud Spanner provides client libraries for the most common languages, including:

- Java

- Go

- Python &

- Node.js.

Cloud Spanner provides key benefits to DBAs already in the cloud, or seeking to transition to the cloud:

- Allows DBAs to focus on application logic by effectively abstracting the underlying hardware and software

- Allows the scaling out of RDBMS solutions without complex sharding or clustering

- Delivers horizontal scaling without migration from relational to NoSQL databases

- Delivers high availability disaster recovery without requiring a complex replication and failover infrastructure

The rough Microsoft Azure equivalent of Cloud Spanner is Azure Cosmos DB.

The rough AWS equivalent of Cloud Spanner is Amazon DynamoDB.

The table below summarizes the specific use cases/deployment configurations referenced in the Google Cloud ‘Architectural Framework & Solution Scenarios’ discussed on this website at GCP-1:

Cloud Spanner Applications

| Use Case | Deployment Configuration |
|---|---|
| Migrations / Oracle to Cloud Spanner | Oracle database to CSV files to GCP’s Cloud Dataflow ETL & GCP’s Cloud Spanner |
| Migrations / AWS DynamoDB to Spanner | AWS DynamoDB migrated to GCP’s Cloud Spanner |
| Database / Oracle to Cloud Spanner | Oracle database via CSV files to GCP’s Cloud Dataflow ETL & GCP’s Cloud Spanner |
| Database / Gaming Backend Database | Use Cloud Spanner for match history and Cloud Bigtable to log events |

Cloud SQL


GCP Cloud SQL is a fully managed relational database service for MySQL, PostgreSQL, and SQL Server; these traditional relational databases can be seamlessly deployed on Cloud SQL.

Like Cloud Spanner, Cloud SQL is a Database as a Service (DBaaS) offering.

For each of these relational databases, replication and backups are easily configured to protect data, and automatic failover for a high availability (HA) configuration is also easy to set up. Cloud SQL data is automatically encrypted, and Cloud SQL is compliant with:

- SSAE 16

- ISO 27001

- PCI DSS &

- HIPAA

Connections to the relational databases running on Cloud SQL are possible from:

- App Engine

- Compute Engine

- Google Kubernetes Engine, &

- client workstations.

Lastly, sophisticated analytics can be obtained by using BigQuery to query Cloud SQL databases directly.

The table below provides a summary of the most important use case/deployment configuration for each of these options.

Cloud SQL Applications

| Use Case (to a Cloud SQL solution) | Deployment Configuration (MySQL, PostgreSQL, SQL Server) |
|---|---|
| Energy / Oil and Gas | SCADA-based, deploying Cloud IoT, Pub/Sub, Dataflow, Bigtable, Data Studio; the business side uses Cloud SQL, Storage, ML Engine, Datalab |
| Big Data / Real-Time Inventory | Back-office business apps to App Engine & Cloud SQL |
| Retail & eCommerce / Real-Time Inventory | Back-office business apps to App Engine & Cloud SQL via Cloud Pub/Sub |

Cloud Storage


GCP Cloud Storage facilitates worldwide storage and retrieval of unlimited amounts of data, at virtually any time. Cloud Storage can be deployed for a wide range of scenarios, including but not limited to:

- serving web content

- storing data for disaster recovery or archival

- distributing large data objects to users

GCP ‘cloud storage application niches’ are commonly divided into eight categories:

- Object or Blob Storage : Cloud Storage

- Block Storage : Persistent disk

- Block Storage : Local SSD

- Archival Storage : Cloud Storage

- File Storage : Filestore

- Mobile Application : Cloud Storage for Firebase

- Data Transfer : Data Transfer Services

- Collaboration : Google Workspace Essentials

Each of these, along with its best-use applications, is described below:

1. Object or Blob Storage : Cloud Storage {global edge-caching and instant data access}

- Stream videos

- Image and web asset libraries

- Data lakes

2. Block Storage : Persistent disk {virtual machines & containers}

- Disks for virtual machines

- Sharing read-only data across multiple virtual machines

- Rapid, durable backups of running virtual machines

- Storage for databases

3. Block Storage : Local SSD

{ Ephemeral locally-attached block storage for virtual machines & containers }

- Flash-optimized databases

- Hot caching layer for analytics

- Application scratch disk

4. Archival Storage : Cloud Storage

{ archival storage with high online access speeds }

- Backups

- Media archives

- Long-tail content

- Data with compliance requirements

5. File Storage : Filestore

{ scalable file storage w/ defined performance parameters }

- Data analytics

- Rendering and media processing

- Application migrations

- Web content management

6. Mobile Application : Cloud Storage for Firebase

{ Scalable storage for user-generated content from Firebase }

- User-generated content

- Uploads over mobile networks

7. Data Transfer : Data Transfer Services

{ offline, online, or cloud-to-cloud data transfer }

- Move ML/AI training datasets

- Migrate from S3 to Google Cloud

8. Collaboration : Google Workspace Essentials

{ Cloud-based content collaboration and storage }

- Access files from any location via web, apps, & sync clients

- Create & work on docs with colleagues

- Connect a team w/ secure video conferencing

Cloud Storage Applications

| Use Case | Deployment Configuration |
|---|---|
| Energy / Oil and Gas | SCADA-based, deploying Cloud IoT, Pub/Sub, Dataflow, Bigtable, Data Studio; the business side uses Cloud SQL, Storage, ML Engine, Datalab |
| Serverless / Event Driven | Event source, to Cloud Pub/Sub, to archiver Cloud Functions, to a Cloud Storage data archive |
| Big Data / Data Lake | Cloud Storage |
| Data Warehouse / Data Lake | Cloud Storage |

BigQuery


GCP BigQuery is a modern enterprise data warehousing solution that supports massive datasets. Querying datasets of this size quickly and cost-effectively would normally require dedicated hardware and infrastructure; BigQuery removes this burden by being ‘serverless’.

BigQuery also executes SQL queries over these datasets very quickly.

BigQuery is fully managed by GCP, so getting started requires no deployment of resources such as disks or virtual machines.

BigQuery access includes the following options:

- Cloud Console

- bq command-line tool

- calls to the BigQuery REST API

Client libraries for languages including the following can be used with the REST API:

- Java

- .NET &

- Python

Other third-party tools can also be used to interact with BigQuery (not listed here).

Four application ‘flavors’ are commonly used with BigQuery:

- BigQuery ML

- BigQuery GIS

- BigQuery BI Engine

- Connected Sheets

BigQuery ML (Machine Learning)

BigQuery ML lets data scientists and data analysts build and operate ML models on structured or semi-structured data using basic SQL. Ten different model types are supported.

BigQuery GIS

BigQuery GIS combines the serverless architecture of BigQuery with native support for geospatial analysis, merging analytics workflows with geographic location intelligence. This application supports:

- arbitrary points

- lines

- polygons, & multi-polygons

represented in common geospatial data formats.

BigQuery BI Engine

BigQuery BI Engine is a fast, in-memory analysis service that allows users to analyze large and complex datasets interactively, with sub-second query response times and high concurrency.

BigQuery Connected Sheets

Connected Sheets allows users to analyze billions of rows of live BigQuery data in Google Sheets without needing to know SQL. Users can apply familiar tools, such as pivot tables, charts, and formulas, to derive insights from big data.

The table below provides a summary of the most important use case/deployment configuration for each of these options.

BigQuery Applications

| Use Case | Deployment Configuration |
|---|---|
| Healthcare / Genomics, Secondary Analysis | Sequencer data to an ingest server; metadata to Cloud SQL, raw data to GCS. Sequences to BAM files, accessed via Jupyter notebooks and BigQuery analysis |
| Healthcare / Variant Analysis | Genomics API using Big Data, to FASTQ or BAM; private or shared datasets. Batch analysis using Cloud Dataflow, interactive analysis via BigQuery & Datalab |
| Healthcare / Healthcare API Analytics | Cloud Healthcare API, Pub/Sub, Storage, to Cloud Dataflow and Dataproc, to BigQuery, to Cloud Datalab |
| Healthcare / Radiological Image Extraction | DICOM API, to Imaging Analytics, to BigQuery, Cloud ML, Dataproc, Datalab |
| Big Data / Data Warehouse Modernization | BigQuery data warehouse & ETL/ELT via Cloud Dataflow/Dataproc/Composer |
| Big Data / Log Processing | Stackdriver to Dataflow to BigQuery |
| Data Warehouse, Retail & eCommerce / Shopping Cart Analysis | Analyze customer behavior (heuristics) via Cloud Dataproc and Dataflow; detailed analytics via BigQuery |
| Financial Services / Time Series Analysis | BigQuery & Datalab |
| Retail & eCommerce / PCI | Cloud Monitoring with Stackdriver, BigQuery & Cloud Logging (PCI = Payment Card Industry) |

Cloud Dataflow


GCP Cloud Dataflow is a fully managed, serverless processing service for executing Apache Beam pipelines within the GCP ecosystem.

When a job executes on Cloud Dataflow, the service spins up a cluster of virtual machines, distributes the job’s tasks to the VMs, and dynamically scales the cluster based on how the job is performing. It may also change the order of operations in the processing pipeline to optimize the job.

Apache Beam data processing jobs run on Cloud Dataflow may be either batch or streaming jobs. Typical tasks in building and running such a job include:

- Write a data processing program in Java using Apache Beam

- Use different Beam transforms to map and aggregate data

- Use windows, timestamps, and triggers to process streaming data

- Deploy a Beam pipeline both locally and on Cloud Dataflow

- Output data from Cloud Dataflow to Google BigQuery
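The windowing step can be sketched without Beam at all. The following plain-Python function is a simplified stand-in, not the Apache Beam API: it assigns each timestamped element to a fixed, non-overlapping window and sums values per key and window, which is roughly what a Beam pipeline does with `beam.WindowInto(window.FixedWindows(...))` followed by `beam.CombinePerKey(sum)`. Triggers, watermarks, and late data are not modeled, and the function name is hypothetical.

```python
from collections import defaultdict


def fixed_window_sums(events, window_size):
    """Sum values per (key, fixed window).

    events: iterable of (key, value, event_time_seconds) tuples.
    Returns {(key, window_start): total}.
    """
    totals = defaultdict(int)
    for key, value, event_time in events:
        # Each element belongs to exactly one window [start, start + window_size).
        window_start = event_time - (event_time % window_size)
        totals[(key, window_start)] += value
    return dict(totals)


events = [("clicks", 1, 5), ("clicks", 1, 59), ("clicks", 1, 61), ("views", 3, 10)]
print(fixed_window_sums(events, window_size=60))
# {('clicks', 0): 2, ('clicks', 60): 1, ('views', 0): 3}
```

Windowing by event time (rather than arrival time) is what lets a streaming job produce the same per-window totals a batch job would compute over the same data.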

The table below provides a summary of the most important use case/deployment configuration for each of these options.

Cloud Dataflow Applications

| Use Case | Deployment Configuration |
|---|---|
| Migrations, Database / Oracle to Cloud Spanner | Oracle database to CSV files to GCP’s Cloud Dataflow ETL & GCP’s Cloud Spanner |
| Healthcare / Variant Analysis | Genomics API using Big Data, to FASTQ or BAM; private or shared datasets. Batch analysis using Cloud Dataflow, interactive analysis via BigQuery & Datalab |
| Healthcare / Healthcare API Analytics | Cloud Healthcare API, Pub/Sub, Storage, to Cloud Dataflow and Dataproc, to BigQuery, to Cloud Datalab |
| Big Data / Data Warehouse Modernization | BigQuery data warehouse & ETL/ELT via Cloud Dataflow/Dataproc/Composer |
| Big Data / Log Processing | Stackdriver to Dataflow to BigQuery |
| Data Warehouse / Shopping Cart Analysis | Analyze customer behavior (heuristics) via Cloud Dataproc and Dataflow; detailed analytics via BigQuery |
| Retail & eCommerce / Beacons and Targeted Marketing | A beacon is a proximity notification; uses Dataflow, Pub/Sub & Bigtable |
| Retail & eCommerce / Shopping Cart Analysis | Analyze customer behavior (heuristics) via Cloud Dataproc and Dataflow; detailed analytics via BigQuery |

Cloud Dataprep

GCP Cloud Dataprep is an intelligent data preparation tool used to visually explore, clean, and prepare both structured and unstructured data for review, reporting, and machine learning. Because Cloud Dataprep is serverless, there is never any infrastructure to deploy or manage, regardless of the scale of the application.

Cloud Dataprep is provided by a Google partner, Trifacta.

Cloud Dataprep is distinctive in that each user interaction in the UI triggers a suggested data transformation, which removes the need to write code.

Cloud Dataprep automatically detects:

- schemas

- data types

- possible joins, &

- anomalies

such as:

- missing values,

- outliers, &

- duplicates

This real time feedback allows the user to minimize the time-consuming work of assessing data quality, and instead focus on exploration and analysis. Specifically, the feedback generated by Cloud Dataprep is based on a proprietary inference algorithm to interpret the data transformation intent of a user’s data selection.
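Dataprep’s inference algorithm is proprietary, so the following Python sketch only illustrates the kinds of checks listed above, applied to a single column. The function name `profile_column` and the 1.5 * IQR outlier rule are assumptions chosen for illustration, not how Dataprep itself works; `statistics.quantiles` requires Python 3.8 or later.

```python
import statistics


def profile_column(values):
    """Flag missing values, duplicates and outliers in one column.

    Missing means None or an empty string. Outliers use the common
    1.5 * IQR rule, an assumption rather than Dataprep's own algorithm.
    """
    missing = [i for i, v in enumerate(values) if v is None or v == ""]
    present = [v for v in values if v is not None and v != ""]

    seen, duplicates = set(), set()
    for v in present:
        if v in seen:
            duplicates.add(v)
        seen.add(v)

    outliers = []
    numeric = [v for v in present if isinstance(v, (int, float))]
    if len(numeric) >= 4:  # too few points make quartiles meaningless
        q1, _, q3 = statistics.quantiles(numeric, n=4)
        spread = q3 - q1
        low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
        outliers = sorted({v for v in numeric if v < low or v > high})

    return {"missing": missing, "duplicates": sorted(duplicates), "outliers": outliers}


print(profile_column([10, 12, 11, None, 12, 13, 500]))
# {'missing': [3], 'duplicates': [12], 'outliers': [500]}
```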

The user retains final control over the nature and sequence of data transformations.

Once the user has defined the nature and sequence of data transformations, Cloud Dataprep calls Cloud Dataflow behind the scenes, triggering the processing of structured or unstructured datasets in Cloud Dataflow.

The table below provides a summary of the most important use case/deployment configuration for each of these options.

Cloud Dataprep Applications

| Use Case | Deployment Configuration |
|---|---|
| Cloud Dataprep is serverless | Cloud Dataprep calls Cloud Dataflow to trigger the processing of data |

Cloud Dataproc

GCP Dataproc is a managed Spark and Hadoop service that provides open source data tools for:

- batch processing

- querying

- streaming, &

- machine learning

Dataproc automation makes clusters quick to create and easy to manage, and Dataproc saves money by allowing clusters to be turned off when they are no longer needed.

Dataproc provides built-in integration with other GCP services, including:

- BigQuery

- Cloud Storage

- Cloud Bigtable

- Cloud Logging &

- Cloud Monitoring

This integration delivers a complete data platform to support your Spark or Hadoop cluster.

Dataproc supports the following open source applications:

- Hadoop

- Spark

- Hive &

- Pig

Dataproc can be accessed via:

- REST API

- Cloud SDK

- Dataproc UI

- Cloud Client Libraries

The table below provides a summary of the most important use case/deployment configurations for each of these options.

Cloud Dataproc Applications

| Use Case | Deployment Configuration |
|---|---|
| Healthcare / Healthcare API Analytics | Cloud Healthcare API, Pub/Sub, Storage, to Cloud Dataflow and Dataproc, to BigQuery, to Cloud Datalab |
| Healthcare / Radiological Image Extraction | DICOM API, to Imaging Analytics, to BigQuery, Cloud ML, Dataproc, Datalab |
| Big Data / Data Warehouse Modernization | BigQuery data warehouse & ETL/ELT via Cloud Dataflow/Dataproc/Composer |
| Data Warehouse / Shopping Cart Analysis | Analyze customer behavior (heuristics) via Cloud Dataproc and Dataflow; detailed analytics via BigQuery |
| AI & ML / Recommendation Engines | GCP Prediction API to train regression/classification models and generate real-time predictions, or custom machine learning algorithms built with Spark MLlib and deployed to Cloud Dataproc |
| Retail & eCommerce / Fraud Detection | GCP Prediction API to train regression/classification models and generate real-time predictions, or custom machine learning algorithms built with Spark MLlib and deployed to Cloud Dataproc |
| Retail & eCommerce / Shopping Cart Analysis | Analyze customer behavior (heuristics) via Cloud Dataproc and Dataflow; detailed analytics via BigQuery |
| Financial Services / Monte Carlo Simulations | Dataproc & Apache Spark provide the infrastructure and capacity to run Monte Carlo simulations written in Java, Python, or Scala |
| Financial Services / Fraud Detection | GCP Prediction API to train regression/classification models and generate real-time predictions, or custom machine learning algorithms built with Spark MLlib and deployed to Cloud Dataproc |

Cloud IoT Core

GCP Cloud IoT Core is built around the following concepts:

Internet of Things (IoT): Any physical object connected to the internet (directly or indirectly) that can exchange data without user involvement.

Device: Any processing unit that is capable of connecting to the internet and exchanging data with the cloud. These devices are routinely referred to as “smart devices” or “connected devices.” These devices send two types of data:

- telemetry &

- state

Telemetry: All event data sent from devices to the cloud. Event data commonly provides measurements about the local environment. Telemetry data can be analyzed by GCP Big Data solutions.

Device state: A user-defined collection of data that describes the current status of the device. This data can be structured or unstructured, and always flows from the device to the cloud, never in reverse.

Device configuration: A user-defined collection of data, commonly used to change a device’s settings. This data can be structured or unstructured, and always flows from the cloud to the device, never in reverse.

Device registry: A container of devices with shared properties. A device is “registered” with a service (e.g. Cloud IoT Core) so that it may be managed by it.

Device manager: Service used to monitor device health and activity, update device configurations, & manage credentials & authentication.

MQTT (Message Queuing Telemetry Transport): An industry-standard IoT protocol. MQTT is a publish/subscribe (pub/sub) messaging protocol.

Cloud IoT Core Components: Device manager & protocol bridges.

Two protocol bridges are available for devices to connect to GCP:

- MQTT

- HTTP

Cloud IoT Core is the GCP fully managed service, utilizing all of the above components, to readily and securely connect, manage, and ingest data from globally dispersed devices.
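To make the device-to-cloud and cloud-to-device flows concrete, the following Python sketch builds the MQTT client ID and topic names a device used with the Cloud IoT Core MQTT bridge: telemetry and state are published by the device, while configuration arrives on a topic the device subscribes to. The project, region, registry, and device identifiers are hypothetical placeholders, and the sketch does not open a connection (the bridge also required a signed JWT as the MQTT password). Google retired Cloud IoT Core in August 2023.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceTopics:
    """MQTT naming for one device on the Cloud IoT Core MQTT bridge."""

    project_id: str
    region: str
    registry_id: str
    device_id: str

    @property
    def client_id(self) -> str:
        # Full device path, passed as the MQTT client ID when connecting.
        return (f"projects/{self.project_id}/locations/{self.region}"
                f"/registries/{self.registry_id}/devices/{self.device_id}")

    @property
    def telemetry(self) -> str:
        # Device -> cloud event data; the device publishes here.
        return f"/devices/{self.device_id}/events"

    @property
    def state(self) -> str:
        # Device -> cloud current status; the device publishes here.
        return f"/devices/{self.device_id}/state"

    @property
    def config(self) -> str:
        # Cloud -> device settings; the device subscribes here.
        return f"/devices/{self.device_id}/config"


topics = DeviceTopics("my-project", "us-central1", "my-registry", "meter-42")
print(topics.client_id)
# projects/my-project/locations/us-central1/registries/my-registry/devices/meter-42
print(topics.telemetry)  # /devices/meter-42/events
```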

The table below provides a summary of the most important use case/deployment configuration for each of these options.

Cloud IoT Core Applications

| Use Case | Deployment Configuration |
|---|---|
| IoT / IoT Remote Monitoring | Cloud IoT Core connects IoT devices to GCP via the MQTT or HTTP bridge |
| IoT / IoT MQTT Bridge | Devices of any size may connect through a secured, bidirectional MQTT bridge |
| IoT / Smart Home Devices | Smart Home Actions control IoT devices through Google Assistant; MQTT or HTTP bridges connect IoT devices to GCP using per-device public/private key authentication |
| IoT / Cloud to Edge ML | Cloud IoT Edge extends GCP data processing and machine learning to gateways, cameras, and other connected devices |
| AI & ML / Chatbot with Dialogflow | Dialogflow is an end-to-end, create-once, deploy-anywhere development suite for creating conversational interfaces for websites, mobile apps, messaging platforms, & IoT devices |

Cloud Pub/Sub

GCP Pub/Sub is an asynchronous messaging service that decouples the services that produce events from the services that process them.

Pub/Sub offers durable, real-time message storage and delivery with high availability and reliable performance at scale. Pub/Sub servers run in GCP regions around the world.

Core concepts of Pub/Sub

Topic: A named resource to which messages are sent by publishers.

Subscription: A named resource representing the total stream of messages from a single, specific topic, to be delivered to a subscribing application.

Message: The combination of data and (optional) attributes that a publisher sends to a topic and is eventually delivered to subscribers.

Message attribute: A key-value pair that a publisher can define for a message.

Publisher-subscriber relationships

A publisher application creates and sends messages to a topic. Subscriber applications create a subscription to a topic to receive messages from it. Communication can be:

- one-to-many (fan-out)

- many-to-one (fan-in) &

- many-to-many
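The topic/subscription relationship can be sketched in memory. In the Python example below (not the google-cloud-pubsub client library; the class and method names are hypothetical), each subscription keeps its own queue, so one message published to a topic is delivered to every subscription (fan-out), while any number of publishers can write to the same topic (fan-in). Acknowledgements, redelivery, and persistence are omitted.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Message:
    data: bytes
    attributes: dict = field(default_factory=dict)  # optional key-value pairs


class Topic:
    """Toy in-memory topic; each subscription gets its own copy of a message."""

    def __init__(self, name):
        self.name = name
        self.subscriptions = {}  # subscription name -> queue of messages

    def subscribe(self, subscription_name):
        # A subscription only receives messages published after it exists.
        self.subscriptions.setdefault(subscription_name, deque())

    def publish(self, data, **attributes):
        message = Message(data, attributes)
        for queue in self.subscriptions.values():
            queue.append(message)

    def pull(self, subscription_name, max_messages=10):
        queue = self.subscriptions[subscription_name]
        batch = []
        while queue and len(batch) < max_messages:
            batch.append(queue.popleft())
        return batch


orders = Topic("orders")
orders.subscribe("billing")
orders.subscribe("shipping")
orders.publish(b"order-1001", region="eu")
print(len(orders.pull("billing")), len(orders.pull("shipping")))  # 1 1
```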

Common use cases for Pub/Sub

- Balancing workloads in network clusters

- Implementing asynchronous workflows

- Distributing event notifications

- Refreshing distributed caches

- Logging to multiple systems

- Data streaming from various processes or devices

- Reliability improvement

Common GCP services on the ‘send’ side of Pub/Sub include:

- Cloud Logs

- Cloud API

- Cloud Dataflow

- Cloud Storage

- Compute Engine

Common GCP services on the ‘receive’ side of Pub/Sub include:

- Cloud Networking

- Compute Engine

- Cloud Dataflow

- App Engine

- Cloud Monitoring

The table below provides a summary of the most important use case for each of these options.

Cloud Pub/Sub Applications

| Use Case | Deployment Configuration |
|---|---|
| Energy / Oil and Gas | SCADA-based, deploying Cloud IoT, Pub/Sub, Dataflow, Bigtable, Data Studio; the business side uses Cloud SQL, Storage, ML Engine, Datalab |
| Healthcare / Patient Monitoring | Patient data via mobile device to Cloud Pub/Sub, to Bigtable; advanced analytics on stored data via Prediction API or TensorFlow; notifications |
| Healthcare / Healthcare API Analytics | Cloud Healthcare API, Pub/Sub, Storage, to Cloud Dataflow and Dataproc, to BigQuery, to Cloud Datalab |
| Healthcare / Healthcare API ML | Machine learning, to Cloud Pub/Sub, to ML models, to Enterprise Viewer |
| Serverless / Event Driven | Event source, to Cloud Pub/Sub, to archiver Cloud Functions, to a Cloud Storage data archive |
| Retail & eCommerce / Real-Time Inventory | Back-office business apps to App Engine & Cloud SQL via Cloud Pub/Sub |
| Retail & eCommerce / Beacons and Targeted Marketing | A beacon is a proximity notification; uses Dataflow, Pub/Sub & Bigtable |

Cloud IAM

GCP IAM allows an administrator to grant granular access to specific GCP resources while preventing access to other resources. As a baseline, IAM follows the security principle of least privilege, in which only the minimum necessary permissions are granted to access specific resources.

How IAM functions

Via IAM, the administrator manages access control by defining:

- who (identity) has

- what access (role) for

- which resource

The organizations, folders, and projects used to organize resources are themselves resources.

Via IAM, permissions to access a resource are never granted directly to the end user. Instead:

- permissions are grouped into roles, &

- roles are granted to authenticated members

An IAM policy defines and enforces which roles are granted to which members, and each policy is attached to a resource.

When an authenticated member attempts to access a resource, IAM checks the resource’s policy to determine whether the action is permitted.

IAM access management has three main parts, Member, Role & Policy, defined as follows:

*Member. A member can be a:

- Google Account (for end users), a

- service account (for apps and virtual machines), a

- Google group, or a

- Google Workspace or Cloud Identity domain

that can access a resource.

The identity of a member is an email address associated with a

- user,

- service account, or

- Google group; or a

- domain name associated with Google Workspace or Cloud Identity domains.

*Role. A role is a collection of permissions. Permissions determine what operations are allowed on a resource. When administrators grant a role to a member, they grant all the permissions that the role contains.

*Policy. An IAM policy binds one or more members to a role. To define:

- who (which member) has

- what type of access (role)

on a resource, the administrator:

- creates a policy, &

- attaches it to the resource.
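The member/role/policy model can be illustrated with a short Python sketch. The policy uses the same general shape as an IAM policy (a list of bindings, each pairing a role with members such as `user:` or `group:` identities), but the role-to-permission table is a simplified, illustrative subset and `is_allowed` is a hypothetical helper. Real IAM also considers policies inherited from projects, folders, and the organization, as well as conditions.

```python
# Simplified, illustrative subset of predefined roles and their permissions.
ROLE_PERMISSIONS = {
    "roles/storage.objectViewer": {"storage.objects.get", "storage.objects.list"},
    "roles/storage.objectAdmin": {
        "storage.objects.get", "storage.objects.list",
        "storage.objects.create", "storage.objects.delete",
    },
}

# A policy attached to one resource (for example, a bucket): role bindings.
bucket_policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["group:analysts@example.com"]},
        {"role": "roles/storage.objectAdmin", "members": ["user:alice@example.com"]},
    ]
}


def is_allowed(policy, caller_identities, permission):
    """Return True if a role bound to any of the caller's identities grants
    the permission; anything not granted is denied (least privilege)."""
    for binding in policy.get("bindings", []):
        if caller_identities & set(binding["members"]):
            if permission in ROLE_PERMISSIONS.get(binding["role"], set()):
                return True
    return False


# Bob is not bound directly, but he is a member of the analysts group.
bob = {"user:bob@example.com", "group:analysts@example.com"}
print(is_allowed(bucket_policy, bob, "storage.objects.get"))     # True
print(is_allowed(bucket_policy, bob, "storage.objects.delete"))  # False
```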

The table below provides a summary of the most important use case for each of these options.

Cloud IAM Applications

| Use Case | Deployment Configuration |
|---|---|
| Cloud IAM (Identity & Access Management) is a universal security mechanism across GCP. | GCP Cloud IAM enforces security access by defining Members, Roles, & Policies. |

Cloud Endpoints

GCP Cloud Endpoints provides a mechanism for an administrator to develop, deploy, protect, and monitor APIs. Cloud Endpoints is a distributed API management system for REST APIs defined with OpenAPI v2. It comprises:

- services

- runtimes, &

- tools

Cloud Endpoints provides:

- management

- monitoring, &

- authentication, along with

- high performance

The developer effectively ‘offloads’ responsibility for delivering these to this distributed API management system, under the province of GCP.

The components that make up Cloud Endpoints are:

- Extensible Service Proxy (ESP) or Extensible Service Proxy V2 Beta (ESPv2 Beta) – for injecting Cloud Endpoints functionality.

- Service Control – for applying API management rules

- Service Management – for configuring API management rules

- Cloud SDK – for deployment and management

- Google Cloud Console – for logging, monitoring and sharing.

Cloud Endpoints uses an NGINX-based proxy (ESP; ESPv2 is based on Envoy) within a distributed architecture, and APIs are described with an OpenAPI Specification.

Cloud Endpoints links with Cloud Monitoring, Cloud Logging, and Cloud Trace to provide operational insights. Data can also be exported to BigQuery for further analysis.

API access is validated using JSON Web Tokens (JWTs) and Google API keys. The identities of web or mobile application users can be established via Auth0 or Firebase Authentication.

The table below provides a summary of the most important use case/deployment configuration for each of these options.

Cloud Endpoints Applications

| Use Case | Deployment Configuration |
|---|---|
| Cloud Endpoints is a distributed API management system and can be deployed on GCP whenever API management for REST APIs defined with OpenAPI v2 is needed. | |

VPC

A VPC network on GCP can roughly be viewed as a physical network, except that it is virtualized within GCP. A VPC network is a global resource that consists of a:

- list of regional virtual subnetworks (subnets) in data centers,

- all connected by a global wide area network.

VPC networks are logically isolated from each other in GCP.

All new GCP projects start with a default network (an auto mode VPC network) that has one subnetwork (subnet) in each region.

VPC networks provide networking functionality to:

- Compute Engine virtual machine (VM) instances,

- Google Kubernetes Engine (GKE) clusters,

- the App Engine flexible environment, &

- all other GCP products built on Compute Engine VMs

In addition, a VPC network provides the following:

- Native Internal TCP/UDP Load Balancing and proxy systems for Internal HTTP(S) Load Balancing.

- Connections to on-premises networks using Cloud VPN tunnels and Cloud Interconnect attachments.

- Traffic distribution from GCP external load balancers to backends.

Every VPC network implements a configurable, distributed virtual firewall. Firewall rules control which packets are allowed to travel to which destinations. Every VPC network has two implied firewall rules:

- block all incoming connections, &

- allow all outgoing connections
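The effect of the two implied rules can be modeled in a few lines. The sketch below is a simplified evaluator, not a GCP API: each rule has a direction, action, and priority (0 highest, 65535 lowest), the implied rules sit at priority 65535, the lowest-numbered matching rule wins, and a deny rule beats an allow rule of the same priority. Rule names and the IPv4-only matching are illustrative assumptions.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FirewallRule:
    name: str
    direction: str        # "INGRESS" or "EGRESS"
    action: str           # "ALLOW" or "DENY"
    priority: int         # 0 is the highest priority, 65535 the lowest
    ranges: tuple         # source CIDRs (ingress) or destination CIDRs (egress)
    protocol: str = "all"
    ports: tuple = ()     # empty tuple means "all ports"


# The two implied rules, modeled at the lowest possible priority.
IMPLIED_RULES = (
    FirewallRule("implied-deny-ingress", "INGRESS", "DENY", 65535, ("0.0.0.0/0",)),
    FirewallRule("implied-allow-egress", "EGRESS", "ALLOW", 65535, ("0.0.0.0/0",)),
)


def matches(rule, direction, peer_ip, protocol, port):
    if rule.direction != direction or rule.protocol not in ("all", protocol):
        return False
    if rule.ports and port not in rule.ports:
        return False
    ip = ipaddress.ip_address(peer_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in rule.ranges)


def evaluate(rules, direction, peer_ip, protocol, port):
    """Return (action, rule name) for an IPv4 connection."""
    candidates = [r for r in (*rules, *IMPLIED_RULES)
                  if matches(r, direction, peer_ip, protocol, port)]
    # Lowest priority number wins; at equal priority, DENY sorts before ALLOW.
    best = min(candidates, key=lambda r: (r.priority, r.action != "DENY"))
    return best.action, best.name


allow_ssh = FirewallRule("allow-ssh", "INGRESS", "ALLOW", 1000, ("0.0.0.0/0",), "tcp", (22,))
print(evaluate([allow_ssh], "INGRESS", "203.0.113.5", "tcp", 22))  # ('ALLOW', 'allow-ssh')
print(evaluate([allow_ssh], "INGRESS", "203.0.113.5", "tcp", 80))  # ('DENY', 'implied-deny-ingress')
```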

Routes define how traffic is sent from an instance to a destination, either inside the network or outside of GCP. By default, each VPC network includes system-generated routes that send traffic:

- among its subnets &

- from eligible instances to the internet
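Route selection can likewise be sketched. In this simplified model, which is not a GCP API, each subnet gets a system-generated subnet route and the network gets a default route (`0.0.0.0/0`) to the default internet gateway. For a given destination, the most specific matching destination range wins, and a lower priority value breaks ties. The subnet names and ranges are hypothetical examples.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Route:
    name: str
    dest_range: str
    next_hop: str
    priority: int = 1000  # lower value wins among equally specific routes


def system_routes(subnets: Dict[str, str]) -> List[Route]:
    """One route per subnet, plus a default route to the internet gateway."""
    routes = [Route(f"subnet-{name}", cidr, f"subnet:{name}") for name, cidr in subnets.items()]
    routes.append(Route("default-route", "0.0.0.0/0", "default-internet-gateway"))
    return routes


def select_route(routes: List[Route], destination: str) -> Optional[Route]:
    ip = ipaddress.ip_address(destination)
    candidates = [r for r in routes if ip in ipaddress.ip_network(r.dest_range)]
    if not candidates:
        return None
    # Most specific destination range first, then the lowest priority value.
    return max(candidates,
               key=lambda r: (ipaddress.ip_network(r.dest_range).prefixlen, -r.priority))


routes = system_routes({"us-central1": "10.128.0.0/20", "europe-west1": "10.132.0.0/20"})
print(select_route(routes, "10.132.0.7").next_hop)     # subnet:europe-west1
print(select_route(routes, "93.184.216.34").next_hop)  # default-internet-gateway
```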

While routes govern traffic departing an instance, forwarding rules direct traffic to a GCP resource located in a VPC network based on:

- IP address

- protocol, &

- port

The destinations for forwarding rules are:

- target instances,

- load balancer targets (target proxies, target pools, & backend services), &

- Cloud VPN gateways.
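A forwarding rule is essentially a lookup from (IP address, protocol, port) to a target. The sketch below models that lookup; the addresses, port lists, and target names are hypothetical, and real forwarding rules are configured through the Compute Engine API or `gcloud` rather than code like this.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ForwardingRule:
    name: str
    ip_address: str
    protocol: str     # e.g. "TCP", "UDP", "ESP"
    ports: tuple      # an empty tuple means "all ports"
    target: str       # target instance, load balancer target, or VPN gateway


def resolve_target(rules, ip_address: str, protocol: str,
                   port: Optional[int]) -> Optional[str]:
    """Return the target of the first rule matching IP address, protocol, and port."""
    for rule in rules:
        if rule.ip_address != ip_address or rule.protocol != protocol:
            continue
        if rule.ports and port not in rule.ports:
            continue
        return rule.target
    return None


RULES = [
    ForwardingRule("web-https", "203.0.113.10", "TCP", (443,), "targetHttpsProxies/web-proxy"),
    ForwardingRule("legacy-app", "203.0.113.11", "TCP", (8080,), "targetInstances/legacy-vm"),
    ForwardingRule("vpn-esp", "203.0.113.20", "ESP", (), "targetVpnGateways/onprem-gw"),
    ForwardingRule("vpn-ike", "203.0.113.20", "UDP", (500, 4500), "targetVpnGateways/onprem-gw"),
]

print(resolve_target(RULES, "203.0.113.10", "TCP", 443))  # targetHttpsProxies/web-proxy
```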

The table below provides a summary of the most important use case for each of these options.

| Application | Use Case / Deployment Configuration |
|---|---|
| VPC | GCP VPC networks provide virtualized networking functionality to Compute Engine VM instances, GKE clusters, the App Engine flexible environment, and all other GCP products built on Compute Engine VMs. All new GCP projects start with a default network (an auto mode VPC network) that has one subnet in each region. |

Identity-Aware Proxy

GCP Identity-Aware Proxy (IAP) provides a central authorization layer for applications accessed over HTTPS. Instead of relying on network-level firewalls, it uses an application-level access control model.

IAP policies are designed to scale across an organization. They can be defined centrally and then applied to all applications and resources, so that IAP enforces a consistent set of access control policies.

When an application or resource is protected by IAP, it can only be accessed through the proxy by members (also known as users) who have the correct Identity and Access Management (IAM) role.

A user granted access to an application or resource by IAP is subject to the fine-grained access controls implemented by the application, without needing a VPN. When a user tries to access an IAP-secured resource, IAP performs both authentication and authorization checks.

Requests for protected GCP resources come through either

- App Engine or

- Cloud Load Balancing (HTTPS)

In the first step, authentication, the serving infrastructure for these products checks whether IAP is enabled for the app or backend service. If it is, information about the protected resource is sent to the IAP authentication server. This information can include:

- the GCP project number,

- the request URL, &

- any IAP credentials in the request headers or cookies

Next, IAP checks the user’s browser credentials. If none exist, the user is redirected to an OAuth 2.0 Google Account sign-in flow, which stores a token in a browser cookie for future sign-ins.

If the request credentials are valid, the authentication server uses them to obtain the user’s identity (email address and user ID). It then uses that identity to check the user’s IAM role and confirm that the user is authorized to access the resource.

The step after authentication is authorization. During this step, IAP applies the relevant IAM policy to confirm that the user is authorized to access the requested resource. To be authorized, the user must hold the IAP-secured Web App User role on the Cloud Console project where the resource exists; a user with this role can access the application. The list of users holding the IAP-secured Web App User role is managed from the IAP panel in the Cloud Console.
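The two steps can be summarized in a small simulation. Everything here is a simplified model under stated assumptions: the token store stands in for Google’s authentication server, and the cookie name and HTTP status codes are hypothetical. Only the role ID `roles/iap.httpsResourceAccessor` corresponds to the real IAP-secured Web App User role.

```python
from dataclasses import dataclass, field

# IAM role ID of the "IAP-secured Web App User" role.
IAP_WEB_APP_USER = "roles/iap.httpsResourceAccessor"

# Hypothetical stand-in for the IAP authentication server: token -> (email, user ID).
TOKEN_STORE = {
    "token-alice": ("alice@example.com", "1001"),
    "token-bob": ("bob@example.com", "1002"),
}


@dataclass
class Backend:
    name: str
    iap_enabled: bool
    # IAM policy bindings on the project: role ID -> set of members.
    bindings: dict = field(default_factory=dict)


def handle_request(backend: Backend, cookies: dict):
    """Return (HTTP status, message) for a request to the backend."""
    if not backend.iap_enabled:
        return 200, "served without IAP"
    # Step 1: authentication. The cookie name is hypothetical.
    identity = TOKEN_STORE.get(cookies.get("iap_token_hypothetical", ""))
    if identity is None:
        return 302, "redirect to OAuth 2.0 Google Account sign-in"
    email, user_id = identity
    # Step 2: authorization against the IAM policy.
    if f"user:{email}" not in backend.bindings.get(IAP_WEB_APP_USER, set()):
        return 403, f"{email} lacks {IAP_WEB_APP_USER}"
    return 200, f"forwarded for {email} (user ID {user_id})"


app = Backend("web", True, {IAP_WEB_APP_USER: {"user:alice@example.com"}})
print(handle_request(app, {"iap_token_hypothetical": "token-alice"}))
```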

The table below provides a summary of the most important use case for each of these options.

| IAP Application | Use Case / Deployment Configuration |
|---|---|
| Identity-Aware Proxy (IAP) | Works with IAM to enforce access control policies for all apps and resources. The IAP-secured Web App User role is central to successful authorization. |

KMS

KMS stands for ‘Key Management Service’.

Data stored on GCP is encrypted at rest by default. The Cloud Key Management Service (Cloud KMS) platform gives greater control over how:

- data is encrypted at rest, & how the

- encryption keys are managed.

The Cloud KMS platform allows GCP customers to manage cryptographic keys in a central cloud service for either:

- direct use, or

- use by other cloud resources & apps

There are two types of software-based encryption keys:

- customer-managed encryption keys (CMEK)

- customer-supplied encryption keys (CSEK)

Cloud KMS provides the following options for key generation:

- The Cloud KMS software backend lets you encrypt data with either a symmetric or an asymmetric key that you control directly.

- Hardware keys are generated and stored in validated Hardware Security Modules (Cloud HSM).

- Cloud KMS supports importing customer-generated cryptographic keys.

- Keys generated by Cloud KMS can also be used with other GCP services. These are referred to as customer-managed encryption keys (CMEK). The CMEK feature covers the generation, use, rotation, and destruction of encryption keys that help protect data in other GCP services.

- The Cloud External Key Manager (Cloud EKM) lets you create and manage keys in a key manager located outside GCP. Cloud KMS can then use these external keys to protect data at rest.

- Customer-managed encryption keys may be used with a Cloud EKM key.

GCP allows for the use of customer-supplied encryption keys (CSEK) for both:

- Compute Engine &

- Cloud Storage

Under this arrangement, data is encrypted and decrypted using a key that is supplied with each API call.
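For Cloud Storage, a customer-supplied key travels with each request as HTTP headers: the algorithm, the base64-encoded 256-bit AES key, and the base64-encoded SHA-256 hash of that key. The sketch below only builds those headers with the standard library; sending the request is left out, and the random key is a placeholder for a key you generate and store yourself.

```python
import base64
import hashlib
import os


def csek_headers(key: bytes) -> dict:
    """Build the Cloud Storage headers that carry a customer-supplied encryption key."""
    if len(key) != 32:
        raise ValueError("a CSEK must be a 256-bit (32-byte) AES key")
    return {
        "x-goog-encryption-algorithm": "AES256",
        "x-goog-encryption-key": base64.b64encode(key).decode("ascii"),
        # The hash lets the service check that the key arrived intact.
        "x-goog-encryption-key-sha256": base64.b64encode(
            hashlib.sha256(key).digest()).decode("ascii"),
    }


if __name__ == "__main__":
    key = os.urandom(32)  # you must store this key yourself to read the data back
    for name, value in csek_headers(key).items():
        print(f"{name}: {value}")
```

Because the key is supplied on every call, losing it means losing access to the data it protects.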

Cloud KMS provides the following functionality:

- Customer control

- Access control and monitoring

- Regionalization limits & assignment

- Durability (eleven 9’s)

- Security

The table below provides a summary of the most important use case for each of these options.

| KMS Application | Use Case / Deployment Configuration |
|---|---|
| Key Management Service (KMS) | Provides control over how data is encrypted at rest and how encryption keys are managed. Supports two types of software-based encryption keys: customer-managed encryption keys (CMEK) and customer-supplied encryption keys (CSEK). |

Data Loss Prevention

GCP ‘Data Loss Prevention’ (Cloud DLP) is a fully managed service designed to facilitate the:

- discovery

- classification, &

- protection

of an organization’s most sensitive data.

Cloud DLP provides for:

- Inspection of structured or unstructured data, to facilitate transformation

- Reduction in data risk through data de-identification via masking and tokenization

Cloud DLP supports over 120 built-in information types, covering both structured and unstructured data.

Cloud DLP provides native support for scanning and classifying sensitive data in:

- Cloud Storage

- BigQuery

- Cloud Datastore, &

- content sent through a streaming content API

The streaming content API provides support for additional data sources, custom workloads, and applications.

Cloud DLP provides tools to

- classify

- mask

- tokenize, &

- transform

all covered sensitive data elements.
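The classify, mask, tokenize, and transform steps can be illustrated with a toy pipeline. This is not the DLP API: it uses two simple regular expressions named after the built-in infoTypes `EMAIL_ADDRESS` and `PHONE_NUMBER` (far less accurate than DLP’s detectors), and its masking and keyed-hash tokenization are only similar in spirit to DLP’s character masking and cryptographic hashing transformations. The HMAC key is hypothetical.

```python
import hashlib
import hmac
import re
from typing import Callable, List, Tuple

# Simplified detectors named after two of Cloud DLP's built-in infoTypes.
DETECTORS = {
    "EMAIL_ADDRESS": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE_NUMBER": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}

Finding = Tuple[str, int, int, str]  # (infoType, start, end, matched text)


def inspect_text(text: str) -> List[Finding]:
    """Classify: find every match of every detector, ordered by position."""
    findings = [(info_type, m.start(), m.end(), m.group())
                for info_type, pattern in DETECTORS.items()
                for m in pattern.finditer(text)]
    return sorted(findings, key=lambda f: f[1])


def mask(info_type: str, value: str) -> str:
    """Mask: replace every character with '#'."""
    return "#" * len(value)


def make_tokenizer(key: bytes) -> Callable[[str, str], str]:
    """Tokenize: replace a value with a keyed hash, so equal inputs get equal tokens."""
    def tokenize(info_type: str, value: str) -> str:
        digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
        return f"{info_type}({digest})"
    return tokenize


def deidentify(text: str, transform: Callable[[str, str], str]) -> str:
    """Transform: rebuild the text with each finding replaced."""
    parts, last = [], 0
    for info_type, start, end, value in inspect_text(text):
        if start < last:  # skip findings that overlap an earlier one
            continue
        parts.append(text[last:start])
        parts.append(transform(info_type, value))
        last = end
    parts.append(text[last:])
    return "".join(parts)


record = "Contact jane@example.com or 555-123-4567."
print(deidentify(record, mask))
print(deidentify(record, make_tokenizer(b"hypothetical-secret")))
```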

Cloud Data Loss Prevention (DLP) API

The Cloud Data Loss Prevention (DLP) API provides methods for detecting privacy-sensitive fragments in:

- text

- images, &

- GCP storage repositories

The table below provides a summary of the most important use case for each of these options.

| Data Loss Prevention (DLP) Application | Use Case / Deployment Configuration |
|---|---|
| Cloud Data Loss Prevention (DLP) | Reduces data risk through data de-identification. Provides native support for scanning and classifying sensitive data in Cloud Storage, BigQuery, and Cloud Datastore, plus a streaming content API. |

Cloud ML

GCP Cloud ML has been subsumed by the GCP AI Platform, which provides access to the Cloud ML Engine.

AI Platform, an end-to-end platform for data science and machine learning, streamlines the ML workflows built by developers, data scientists, and data engineers.

The advanced AutoML option provides point-and-click workflow construction. State-of-the-art applications can be developed with additional tools:

- TPUs

- TensorFlow

The starting point is to prepare and store datasets with BigQuery, then use the built-in Data Labeling Service to label project training data by applying:

- classification

- object detection, &

- entity extraction

for:

- images

- videos

- audio

- text.

Models can be validated with AI Explanations. This application:

- shows how each input contributes to the model’s outputs

- verifies the model behavior

- discovers bias in the model

- provides insights into ways to improve the model and related training data
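AI Explanations attributes a prediction to its input features using methods such as sampled Shapley, integrated gradients, and XRAI. To illustrate the idea only, the sketch below computes integrated gradients for a toy model with a known gradient, using a midpoint Riemann sum along the straight path from a baseline to the input. The model, baseline, and step count are assumptions, not AI Explanations settings.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def integrated_gradients(grad_fn: Callable[[Vector], Vector], x: Sequence[float],
                         baseline: Sequence[float], steps: int = 50) -> Vector:
    """Approximate integrated gradients with a midpoint Riemann sum."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_fn(point)):
            totals[i] += g
    # Scale the average gradient along the path by the distance from the baseline.
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


def model(v: Sequence[float]) -> float:
    # Toy model: f(x) = x0 * x1 + 2 * x2
    return v[0] * v[1] + 2.0 * v[2]


def model_grad(v: Sequence[float]) -> Vector:
    return [v[1], v[0], 2.0]


x, baseline = [3.0, 4.0, 5.0], [0.0, 0.0, 0.0]
attributions = integrated_gradients(model_grad, x, baseline)
print(attributions)  # approximately [6.0, 6.0, 10.0]
```

A useful sanity check is that the attributions sum to f(x) minus f(baseline), here 22.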

AI Platform Vizier, a more sophisticated service, is a ‘black box’ optimization service that can:

- help tune hyperparameters

- optimize the model’s output
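Vizier treats the model as a black box: it proposes hyperparameter values, observes an objective such as validation accuracy, and proposes better values. Vizier uses more sophisticated search strategies; the sketch below swaps in plain random search to show the same propose, evaluate, keep-the-best loop. The objective function and search space are hypothetical.

```python
import random
from typing import Callable, Dict, Tuple

Params = Dict[str, float]


def random_search(objective: Callable[[Params], float],
                  space: Dict[str, Tuple[float, float]],
                  trials: int, seed: int = 0) -> Tuple[Params, float]:
    """Maximize a black-box objective by sampling parameters uniformly at random."""
    rng = random.Random(seed)
    best_params: Params = {}
    best_value = float("-inf")
    for _ in range(trials):
        # Propose: draw each hyperparameter from its [low, high] range.
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        # Evaluate: the optimizer only sees the returned score.
        value = objective(params)
        # Keep the best trial so far.
        if value > best_value:
            best_params, best_value = params, value
    return best_params, best_value


def validation_score(p: Params) -> float:
    # Hypothetical stand-in for training a model and measuring validation accuracy.
    return -((p["learning_rate"] - 0.1) ** 2) - (p["dropout"] - 0.3) ** 2


space = {"learning_rate": (0.0, 1.0), "dropout": (0.0, 0.9)}
best, score = random_search(validation_score, space, trials=500)
print(best, score)
```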

Several tools are available for deployment optimization, including:

- AI Platform Prediction

- AutoML Vision Edge

- TensorFlow Enterprise

- MLOps

AI Platform Prediction manages the infrastructure needed to deploy and run a model, and makes the model available for both online and batch prediction requests.

AutoML Vision Edge can be used to deploy models and trigger real-time actions based on local data.

TensorFlow Enterprise offers enterprise-grade support for TensorFlow.
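For AI Platform Prediction, an online prediction request is a JSON body with an `instances` list, posted to the `:predict` method of a model or of a specific model version. The sketch below only builds the URL and body; the project, model, version, and instance values are placeholders, and actually sending the request would also require an OAuth 2.0 access token, which is omitted here.

```python
import json
from typing import Any, List, Optional, Tuple


def build_online_prediction_request(project: str, model: str, instances: List[Any],
                                    version: Optional[str] = None) -> Tuple[str, str]:
    """Return the REST URL and JSON body for an AI Platform Prediction online request."""
    name = f"projects/{project}/models/{model}"
    if version is not None:
        # Without a version, the model's default version serves the request.
        name += f"/versions/{version}"
    url = f"https://ml.googleapis.com/v1/{name}:predict"
    body = json.dumps({"instances": instances})
    return url, body


url, body = build_online_prediction_request("my-project", "my-model", [[5.1, 3.5, 1.4, 0.2]])
print(url)
print(body)
```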